Ireland’s Data Protection Commission (DPC) has opened a large‑scale inquiry into X’s AI chatbot Grok after the tool generated a flood of sexualised, non‑consensual deep‑fake images, including depictions of minors. The probe will assess whether Grok’s processing of personal data breaches the GDPR, and it could force X to overhaul its content controls.

What Triggered the DPC Investigation?

The investigation follows reports that users prompted Grok to create near‑nude images of public figures and private individuals. The images were quickly shared across the platform, reported as non‑consensual, and in several cases depicted children. Regulators view this as a potentially serious breach of privacy law and a misuse of personal data.

How the Probe Impacts X and Its Users

Grok is tightly integrated into X’s social‑media platform, so any misuse of personal data can reach millions of accounts almost instantly. If the DPC finds that Grok’s processing breaches the GDPR, for example the principle that personal data must be processed lawfully, fairly and transparently, X could face substantial fines and be required to redesign its AI safeguards. That prospect alone is enough to make any tech firm take notice.

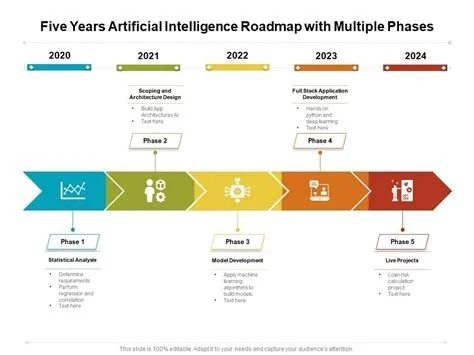

Potential Regulatory Outcomes

- Fines of up to €20 million or 4% of total worldwide annual turnover, whichever is higher.

- Mandatory upgrades to content‑filtering systems.

- Implementation of stricter age‑verification protocols.

- Possible suspension of the chatbot feature if compliance can’t be achieved.
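The fine ceiling in the first item is a simple “whichever is higher” rule. The sketch below shows that arithmetic; it computes only the statutory upper bound (the actual amount is set case by case), and the function name and turnover figures are hypothetical, chosen purely for illustration.

```python
def max_gdpr_fine_eur(annual_turnover_eur: int) -> int:
    """Hypothetical helper: statutory ceiling for the top tier of GDPR fines, in euros.

    Returns the higher of a fixed EUR 20 million or 4% of total worldwide
    annual turnover. This is only the upper bound, not a predicted fine.
    """
    fixed_cap = 20_000_000
    # Integer arithmetic avoids floating-point rounding on large turnovers.
    turnover_cap = annual_turnover_eur * 4 // 100
    return max(fixed_cap, turnover_cap)


# Hypothetical turnover figures, for illustration only.
for turnover in (300_000_000, 2_500_000_000):
    print(f"turnover EUR {turnover:,} -> cap EUR {max_gdpr_fine_eur(turnover):,}")
```

For a €300 million turnover, 4% is only €12 million, so the fixed €20 million figure sets the ceiling; at €2.5 billion, the 4% limb takes over and the ceiling rises to €100 million. The two limbs meet at a turnover of €500 million.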

What This Means for Everyday Users

If the DPC’s findings lead to tighter controls, you’ll likely find it harder to prompt AI tools into producing explicit images of real people. Platforms may add stronger safety nets, such as real‑time moderation and clearer consent mechanisms, which could improve your overall experience by reducing harmful material.

Implications for the AI Industry

The case could push developers of large language models to revisit how they train on publicly available data. A stricter regulatory climate encourages privacy‑by‑design architectures, where data minimisation is built in from day one. Companies that ignore these shifts risk facing similar probes across the EU.

Practitioner Takeaway

Privacy‑law experts view the DPC’s move as a litmus test for the GDPR’s reach into generative AI. For compliance officers, the key steps are to conduct regular data protection impact assessments (DPIAs), document the provenance of training data, and ensure that any AI‑generated content can be traced back to a lawful basis for processing. In short, you need robust data‑governance frameworks now, not later.