The White House posted an AI‑generated image of civil‑rights lawyer Nekima Levy Armstrong in handcuffs, complete with exaggerated tears, sparking a heated debate about government‑driven misinformation. You’ll see how the altered photo spread across social media, why officials defended the move, and what the fallout means for trust in official visuals.

Why the AI‑Altered Image Matters

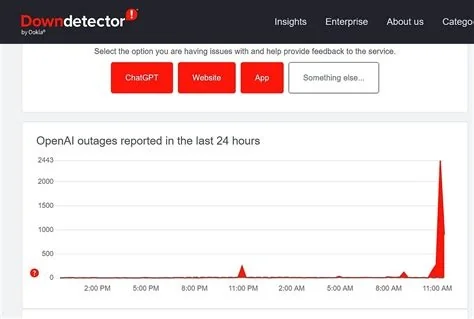

Even after a visual is debunked, its emotional punch can linger. Researchers call this the continued influence effect: misinformation keeps shaping what people believe even after it has been corrected, so a striking picture can outlast a dry fact‑check. The White House’s choice to use a crude, tear‑filled rendition suggests a strategic pivot toward flooding the information ecosystem with low‑fidelity AI content that overwhelms factual rebuttals.

Emotional Power of Manipulated Visuals

People react instantly to faces in distress. When you scroll past a crying activist, the gut response often outweighs any later correction. That instant impact is why governments and campaigns alike are experimenting with AI‑crafted imagery.

Government Response and Public Backlash

The White House’s official account initially defended the post, with a spokesperson saying, “The memes will continue.” Critics argued that the comment signaled a new willingness to weaponise low‑quality AI for political effect. The backlash grew louder after the image was compared to a sober photo released by another agency moments earlier.

Armstrong’s Critique

Nekima Levy Armstrong publicly condemned the manipulation, accusing the administration of degrading her and normalising state‑sponsored misinformation. She warned that such tactics could erode trust in legitimate protest movements and urged officials to adopt transparent visual standards.

Expert Analysis on Visual Integrity

Dr. Maya Rao, a digital forensics specialist, describes the incident as a “wake‑up call for every institution that relies on visual evidence.” She explains that the tools behind the Armstrong image are likely fine‑tuned diffusion models that run on a standard laptop, making misuse alarmingly easy.

Immediate Steps for Safeguarding Authenticity

- Mandatory metadata tagging for any AI‑generated content released by official accounts.

- Independent audit trails that log every step of image manipulation workflows.

- Public verification dashboard where citizens can check the authenticity of government‑issued visuals.
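
The three safeguards above can be illustrated with a minimal Python sketch. It is not any agency's actual system: the function names (`make_provenance_tag`, `append_entry`, `verify_log`, `matches_published`) and the record fields are hypothetical, chosen only for illustration. It assumes a published image is identified by its SHA‑256 digest and that each audit entry stores the hash of the previous entry, so that editing an earlier entry breaks the chain.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first log entry


def sha256_hex(data: bytes) -> str:
    """Return the SHA-256 digest of data as a hex string."""
    return hashlib.sha256(data).hexdigest()


def make_provenance_tag(image_bytes: bytes, ai_generated: bool, tool: str) -> dict:
    """Hypothetical metadata record published alongside an image."""
    return {
        "image_sha256": sha256_hex(image_bytes),
        "ai_generated": ai_generated,
        "tool": tool,  # free-text label, an assumption for this sketch
    }


def _entry_hash(body: dict) -> str:
    # Serialize with sorted keys so the same body always hashes the same way.
    return sha256_hex(json.dumps(body, sort_keys=True).encode("utf-8"))


def append_entry(log: list, action: str, image_bytes: bytes) -> dict:
    """Append one manipulation step, chained to the previous entry's hash."""
    prev_hash = log[-1]["entry_hash"] if log else GENESIS
    body = {
        "action": action,
        "image_sha256": sha256_hex(image_bytes),
        "prev_hash": prev_hash,
    }
    entry = {**body, "entry_hash": _entry_hash(body)}
    log.append(entry)
    return entry


def verify_log(log: list) -> bool:
    """Recompute every hash; False if any entry was altered or reordered."""
    prev_hash = GENESIS
    for entry in log:
        body = {k: v for k, v in entry.items() if k != "entry_hash"}
        if body["prev_hash"] != prev_hash:
            return False
        if _entry_hash(body) != entry["entry_hash"]:
            return False
        prev_hash = entry["entry_hash"]
    return True


def matches_published(image_bytes: bytes, tag: dict) -> bool:
    """Dashboard-style check: does this file match the published record?"""
    return sha256_hex(image_bytes) == tag["image_sha256"]


if __name__ == "__main__":
    log = []
    append_entry(log, "original_photo", b"raw image bytes")
    append_entry(log, "ai_edit", b"edited image bytes")
    tag = make_provenance_tag(b"edited image bytes", True, "hypothetical-model")
    print(verify_log(log), matches_published(b"edited image bytes", tag))
```

A sketch like this only detects changes to the log or to the file's exact bytes. It does not prove who wrote an entry, and a re‑encoded or cropped copy of the image would no longer match its digest. A real deployment would probably add cryptographic signatures and timestamps, and might build on an existing provenance standard such as C2PA rather than a custom format.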

Future Outlook for AI‑Generated Government Content

As AI tools become more accessible, the line between satire, propaganda, and outright deception will keep blurring. Platforms may tighten their policies, but without clear enforcement, you could see more agencies experiment with “slop” (low‑quality, mass‑produced AI content) as a soft‑power tactic. The key will be how quickly the tech community develops reliable detection tools and how willing policymakers are to hold officials accountable.