Lumen has introduced a Multi‑Cloud Gateway that lets you spin up private, high‑capacity links between on‑premises data centers, public clouds, and edge locations in minutes, while its new metro connections of up to 400 Gbps cut the data‑transfer times that slow AI workloads. The solution combines a software‑defined routing layer with dedicated fiber paths, giving enterprises both speed and flexibility.

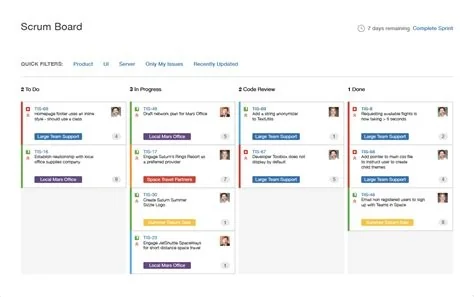

How the Multi‑Cloud Gateway Works

Self‑Service Routing and Policy Control

The gateway runs on Lumen’s global fiber backbone and offers a single pane of glass for provisioning. You can create cloud‑to‑cloud or cloud‑to‑enterprise circuits in minutes, apply traffic‑shaping rules, and monitor performance without juggling multiple vendor portals.

- On‑demand link creation – provision connections in minutes.

- Unified policy engine – enforce QoS and security across all paths.

- Real‑time analytics – track latency, throughput, and cost.

400 Gbps Metro Links Boost AI Performance

Dedicated Metro Ethernet and IP services now deliver up to 100 Gbps between regional sites and up to 400 Gbps at flagship cloud data centers. When AI model training moves terabytes of data across the network, that extra bandwidth can cut transfer times by seconds or even minutes.
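To put those rates in perspective, here is a rough back‑of‑the‑envelope sketch in Python. It assumes decimal terabytes, a fully utilized link, and no protocol overhead or latency, so real transfers will take longer. The 10 TB dataset size and the `transfer_seconds` helper are illustrative assumptions, not figures or tools from Lumen.

```python
def transfer_seconds(data_terabytes: float, link_gbps: float) -> float:
    """Ideal time to move a dataset over a link.

    Assumes decimal units (1 TB = 1e12 bytes, 1 Gbps = 1e9 bits/s) and
    ignores protocol overhead, congestion, and round-trip latency.
    """
    if data_terabytes < 0 or link_gbps <= 0:
        raise ValueError("data size must be >= 0 and link rate must be > 0")
    bits = data_terabytes * 1e12 * 8      # terabytes -> bits
    return bits / (link_gbps * 1e9)       # bits / (bits per second)


# Hypothetical 10 TB training dataset at the two rates cited in the article.
dataset_tb = 10
for gbps in (100, 400):
    seconds = transfer_seconds(dataset_tb, gbps)
    print(f"{gbps} Gbps: {seconds:.0f} s ({seconds / 60:.1f} min)")
```

Under these idealized assumptions, the 10 TB transfer drops from roughly 13 minutes at 100 Gbps to a little over 3 minutes at 400 Gbps, which is the scale of saving the article describes.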

Industry Impact

Several sectors stand to benefit immediately:

- Financial services – faster market data feeds enable fresher algorithmic trading decisions.

- Biotech – rapid genomic data transfers accelerate research cycles.

- Media & entertainment – AI‑generated content streams with minimal buffering.

What This Means for Your Network Strategy

Traditional telco interconnects often require weeks of provisioning. With Lumen’s self‑service model, you can treat routing policies like code, version‑controlling them and embedding them in CI/CD pipelines. This shift toward “network as code” reduces operational overhead and aligns telecom capabilities with modern cloud‑native workflows.
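As a minimal sketch of what "network as code" could look like, the Python below defines routing policies as data, validates them, and renders them as stable JSON that can be committed to version control and checked in a CI job. Everything here is hypothetical: the `RoutePolicy` fields, the QoS class names, and the endpoint labels are illustrative assumptions and do not reflect Lumen's actual API or policy schema. Only the 400 Gbps ceiling comes from the article.

```python
import json
from dataclasses import asdict, dataclass
from typing import List

# Hypothetical values for illustration; not Lumen's real schema.
ALLOWED_QOS = {"best-effort", "priority", "real-time"}
MAX_BANDWIDTH_GBPS = 400  # highest rate mentioned in the article


@dataclass(frozen=True)
class RoutePolicy:
    name: str
    source: str
    destination: str
    bandwidth_gbps: int
    qos_class: str


def validate(policy: RoutePolicy) -> List[str]:
    """Return a list of human-readable problems (empty if the policy is valid)."""
    errors = []
    if not 0 < policy.bandwidth_gbps <= MAX_BANDWIDTH_GBPS:
        errors.append(f"{policy.name}: bandwidth must be 1..{MAX_BANDWIDTH_GBPS} Gbps")
    if policy.qos_class not in ALLOWED_QOS:
        errors.append(f"{policy.name}: unknown QoS class '{policy.qos_class}'")
    if policy.source == policy.destination:
        errors.append(f"{policy.name}: source and destination must differ")
    return errors


def render(policies: List[RoutePolicy]) -> str:
    """Serialize policies deterministically so version-control diffs stay small."""
    ordered = sorted(policies, key=lambda p: p.name)
    return json.dumps([asdict(p) for p in ordered], indent=2, sort_keys=True)


policies = [
    RoutePolicy("train-data", "onprem-dc1", "cloud-a", 100, "priority"),
    RoutePolicy("backup", "cloud-a", "cloud-b", 10, "best-effort"),
]

problems = [msg for p in policies for msg in validate(p)]
if problems:
    raise SystemExit("\n".join(problems))  # a CI job would fail here
print(render(policies))
```

Keeping validation separate from rendering means a pipeline can reject a bad policy before anything is submitted to a provisioning system, and the sorted output keeps review diffs limited to the policies that actually changed.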

If you’re planning to scale AI workloads or modernize hybrid environments, the gateway and its metro links offer a predictable, high‑speed foundation. The service is designed to keep pace with the next generation of foundation models, so you can spend less time working around network bottlenecks.