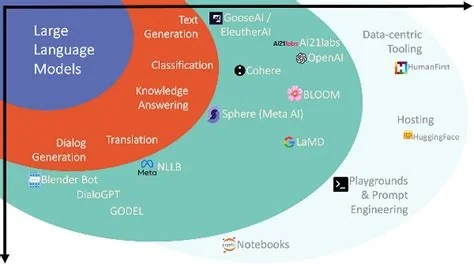

OpenAI just introduced two new security controls for ChatGPT: Lockdown Mode and Elevated Risk labels. Lockdown Mode restricts the model to cached data and blocks live web requests, while the labels flag functions that could expose sensitive information. Together they give you a straightforward way to curb prompt-injection attacks and tighten data-handling policies without disabling AI assistance.

What Is Lockdown Mode?

Lockdown Mode is an optional, enterprise-grade setting that restricts the model’s interaction with external systems. When you enable it, the AI can only retrieve information from a cached repository; any attempt to make a live network call is blocked. Features that can’t guarantee data safety are disabled automatically, which makes the environment more predictable and closes off common injection pathways.
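To make the cache-only behavior concrete, here is a minimal Python sketch of a fetcher that serves pre-cached content and refuses everything else. It illustrates the idea only and is not OpenAI's implementation: `CachedOnlyFetcher`, `LiveRequestBlocked`, and the example URLs are hypothetical names invented for this sketch.

```python
from dataclasses import dataclass, field


class LiveRequestBlocked(Exception):
    """Raised when a lookup would require a live network call."""


@dataclass
class CachedOnlyFetcher:
    # Hypothetical pre-populated cache: URL -> stored page text.
    cache: dict[str, str] = field(default_factory=dict)
    lockdown: bool = True

    def fetch(self, url: str) -> str:
        # A cache hit is always safe to serve.
        if url in self.cache:
            return self.cache[url]
        # In lockdown, a cache miss is an error, never a network request.
        if self.lockdown:
            raise LiveRequestBlocked(f"live request to {url} blocked")
        # Live fetching is deliberately left out of this sketch.
        raise NotImplementedError("live fetching is out of scope here")


fetcher = CachedOnlyFetcher(cache={"https://example.com/docs": "cached copy"})
print(fetcher.fetch("https://example.com/docs"))  # prints "cached copy"
```

The point of the design is that a cache miss fails closed: the caller gets an explicit error instead of a silent fallback to the network.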

How Elevated Risk Labels Work

Elevated Risk labels appear next to specific capabilities, such as code generation or data-intensive queries, that carry a higher chance of misuse. The visual cue prompts you to double-check the request before proceeding, reducing the likelihood of accidental data exposure.

Key Functions That May Receive Labels

- Code generation tools that could reveal API keys.

- Live browsing features that access external sites.

- Data‑summarization modules handling confidential documents.
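As a rough illustration of how a label can act as a checkpoint, the Python sketch below tags the three capabilities listed above and requires explicit confirmation before a tagged one runs. The capability names, `label_for`, and `run_capability` are hypothetical and do not correspond to any ChatGPT API.

```python
# Hypothetical capability identifiers matching the list above.
ELEVATED_RISK = {"code_generation", "live_browsing", "document_summarization"}


def label_for(capability: str) -> str:
    """Return the label shown next to a capability, or an empty string."""
    return "Elevated Risk" if capability in ELEVATED_RISK else ""


def run_capability(capability: str, confirmed: bool = False) -> str:
    """Run a capability, pausing for confirmation if it is labeled."""
    if capability in ELEVATED_RISK and not confirmed:
        return f"[Elevated Risk] {capability}: confirmation required"
    return f"{capability}: running"


print(run_capability("live_browsing"))
print(run_capability("live_browsing", confirmed=True))
print(run_capability("spell_check"))
```

Unlabeled capabilities run immediately, while labeled ones only run after the user has confirmed the request.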

Enterprise Deployment Options

Lockdown Mode and Elevated Risk labels are available on the Enterprise, Edu, Healthcare, and Teacher editions of ChatGPT. Administrators can activate Lockdown Mode in Workspace Settings by creating a new role, which then layers the restrictions on top of existing role-based access controls and audit logs.

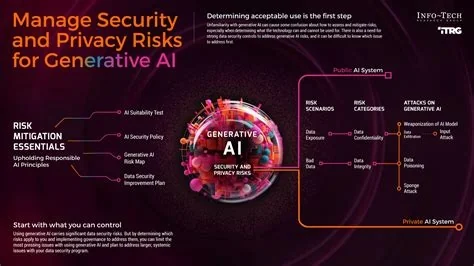

Why Prompt Injection Matters

Prompt injection has evolved from a research curiosity into a real-world threat. A malicious prompt can trick the model into executing unintended commands or leaking sensitive data. As AI becomes embedded in workflows such as automated reports, code assistance, and customer-support bots, the potential damage from a successful injection grows with it.

Benefits for Security and Compliance

These controls give security teams a clear lever to enforce stricter data‑handling postures without pulling the plug on AI productivity. For example, a finance department can keep natural‑language summarization active while disabling live browsing and code‑generation tools that might expose proprietary spreadsheets. The built‑in audit‑log integration also simplifies compliance reporting.

Practical Implementation Tips

Here’s a quick checklist to get you started:

- Identify high‑risk functions in your organization and map them to Elevated Risk labels.

- Create a dedicated “Lockdown” role for users handling confidential data.

- Test the configuration in a sandbox environment before rolling it out company‑wide.

- Educate your team on recognizing the labels and responding appropriately.
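Before touching real settings, it can help to model the checklist on paper. The Python sketch below treats a "Lockdown" role as an extra layer on top of ordinary role permissions and checks the result the way a sandbox test might. Everything in it is an assumption made for illustration: the `Role` class, the capability names, and the `LOCKDOWN_BLOCKED` set are hypothetical and do not mirror the actual Workspace Settings schema.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Role:
    name: str
    allowed: frozenset[str]   # capabilities granted by ordinary access control
    lockdown: bool = False    # whether the lockdown layer applies


# Hypothetical set of capabilities that the lockdown layer removes.
LOCKDOWN_BLOCKED = frozenset({"live_browsing", "code_generation"})


def effective_capabilities(role: Role) -> frozenset[str]:
    """Apply the lockdown layer on top of the role's base permissions."""
    if role.lockdown:
        return role.allowed - LOCKDOWN_BLOCKED
    return role.allowed


base = frozenset({"summarization", "live_browsing", "code_generation"})
analyst = Role("finance-analyst", base)
locked = Role("finance-lockdown", base, lockdown=True)

# Sandbox-style checks before a company-wide rollout.
assert effective_capabilities(analyst) == base
assert effective_capabilities(locked) == {"summarization"}
print(sorted(effective_capabilities(locked)))  # prints ['summarization']
```

Because the lockdown layer only ever subtracts capabilities, adding it to a role can never grant more access than that role already had.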

By applying these settings, you’ll reduce the attack surface for prompt‑injection threats while still reaping the productivity benefits of conversational AI.