A new health report from South Korea warns that heavy daily interaction with generative‑AI chatbots is linked to higher depression scores, and it cites the recent emergency‑room admission of a 15‑year‑old after an intense chatbot session. The data show an association rather than proven cause and effect, but the findings suggest that unchecked AI use can shift quickly from curiosity to a mental‑health crisis.

Key Findings from the Korean Health Report

Depression Scores Rise with Daily AI Use

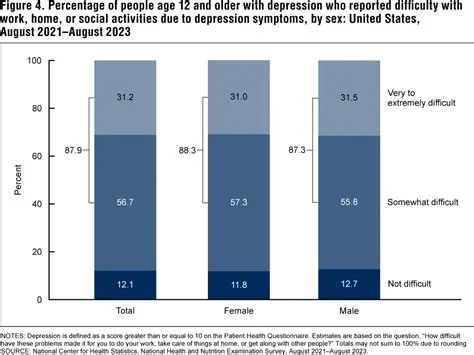

Researchers analyzed data from more than 3,000 adults. Participants who spent more than two hours per day on AI chat platforms scored, on average, 1.8 points higher than lighter users on the PHQ‑9 depression questionnaire, which is scored from 0 to 27. The report stops short of claiming direct causation, noting that people who already feel low may turn to AI for comfort.

Age‑Related Risks Highlighted

The study emphasizes that younger users are especially vulnerable because they often treat chatbots as confidants rather than tools. This emotional attachment can amplify stress and negative thought loops.

Teen Emergency Case Highlights Risks

What Happened in the ER

In Busan, a 15‑year‑old was rushed to the hospital after a prolonged conversation with an AI chatbot left him feeling “trapped in a loop of negative thoughts.” Emergency notes recorded rapid heartbeat, disorientation, and a fleeting urge to self‑harm. After a 12‑hour observation period, he was released, but the incident sparked urgent calls for age‑appropriate safeguards.

Implications for Developers and Regulators

Designing Mental‑Health Safeguards

Tech firms are now urged to embed real‑time mental‑health warnings into user interfaces. Dynamic risk‑assessment layers can flag harmful conversational patterns and prompt users to take a break. Some platforms have already introduced “well‑being modes” that limit session length and provide links to crisis hotlines.
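As a rough illustration of how a session‑limiting "well‑being mode" might work, the Python sketch below tracks one chat session and decides when to suggest a break or end the session. Everything here is hypothetical: the `WellBeingSession` class, its `check` method, the 30‑minute break interval, and the two‑hour cap are assumptions made for this example, not any platform's actual implementation. The two‑hour cap simply mirrors the heavy‑use threshold in the report.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta
from typing import Optional


@dataclass
class WellBeingSession:
    """Hypothetical well-being mode for a single chat session (illustrative sketch)."""

    # Assumed limits; a real platform would choose and justify its own values.
    max_session: timedelta = timedelta(hours=2)
    break_every: timedelta = timedelta(minutes=30)
    started_at: datetime = field(default_factory=datetime.now)
    last_break_prompt: Optional[datetime] = None

    def check(self, now: datetime) -> str:
        """Return 'ok', 'suggest_break', or 'end_session' for the given moment."""
        if now - self.started_at >= self.max_session:
            # Hard cap reached: the UI would close the chat and show crisis-line links.
            return "end_session"
        # Measure time since the last break prompt (or since the session began).
        reference = self.last_break_prompt or self.started_at
        if now - reference >= self.break_every:
            self.last_break_prompt = now
            return "suggest_break"
        return "ok"


# Usage: simulate checks at several points in an evening session.
start = datetime(2024, 1, 1, 20, 0)
session = WellBeingSession(started_at=start)
print(session.check(start + timedelta(minutes=10)))  # ok
print(session.check(start + timedelta(minutes=31)))  # suggest_break
print(session.check(start + timedelta(minutes=40)))  # ok (only 9 minutes since last prompt)
print(session.check(start + timedelta(hours=2)))     # end_session
```

Checking against the time of the last prompt, rather than a fixed schedule, keeps reminders evenly spaced even when the user pauses between messages.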

Regulatory Momentum Grows

Authorities are beginning to treat AI‑related mental‑health risks as a public‑health issue. New guidelines encourage transparent usage metrics and mandatory user‑education frameworks to prevent crises before they emerge.

Recommendations for Users and Caregivers

- Set clear time limits. If you spend more than two hours daily on AI chatbots, you may notice subtle mood changes.

- Use built‑in well‑being features. You can activate break reminders or switch to a low‑interaction mode when you feel overwhelmed.

- Monitor emotional responses. Keep a journal of how conversations affect your mood and share any concerning patterns with a trusted adult or professional.

- Educate young users. Teach children to treat chatbots as tools, not friends, and establish household rules for safe usage.

By combining rigorous research, thoughtful design, and proactive policy, society can keep AI as a helpful assistant without letting it become a hidden source of distress.