Samsung’s latest Arm SME2‑based CPU pairs a matrix‑optimized core with the company’s upcoming HBM4 memory, promising faster on‑device AI without overheating. By integrating the new CPU into its I‑Cube side‑by‑side packaging, Samsung aims to deliver higher bandwidth and lower latency for edge workloads, and to position its 2 nm process as a ready‑to‑scale option for smartphones and IoT devices.

What Is the Arm SME2 CPU Boost?

The boost relies on Arm’s Scalable Matrix Extension 2 (SME2) to accelerate the matrix‑heavy operations behind vision, speech and recommendation models. SME2 adds specialized instructions that let the CPU process large tensors more efficiently, cutting compute cycles and energy use compared with cores that lack matrix instructions.
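To make "matrix‑heavy operations" concrete, here is a minimal Python sketch of a matrix multiply written as a running sum of outer products, the accumulation pattern that Arm's matrix extension is designed around. It is an illustration only, not Samsung's or Arm's implementation: the function name `outer_product_matmul` and the plain nested lists are assumptions made for this example, and real SME2 code would use hardware tile storage and vector registers rather than Python loops.

```python
def outer_product_matmul(a, b):
    """Compute C = A x B by accumulating one rank-1 outer product per step.

    Hypothetical helper for illustration; `a` is rows x inner, `b` is inner x cols.
    """
    rows, inner, cols = len(a), len(b), len(b[0])
    # Accumulator for the result, playing the role a hardware tile would play.
    c = [[0.0] * cols for _ in range(rows)]
    for k in range(inner):
        col_k = [a[i][k] for i in range(rows)]  # k-th column of A
        row_k = b[k]                            # k-th row of B
        # Add the outer product col_k (x) row_k into the accumulator.
        for i in range(rows):
            for j in range(cols):
                c[i][j] += col_k[i] * row_k[j]
    return c


a = [[1.0, 2.0], [3.0, 4.0]]
b = [[5.0, 6.0], [7.0, 8.0]]
print(outer_product_matmul(a, b))  # [[19.0, 22.0], [43.0, 50.0]]
```

Each pass of the outer loop touches every element of the accumulator once. A matrix unit can perform that whole pass in far fewer instructions than a scalar core, and that is where the saving in cycles comes from.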

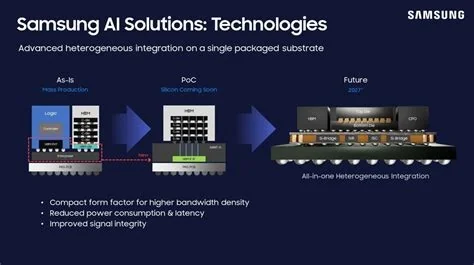

How I‑Cube Packaging Enhances Performance

I‑Cube uses parallel horizontal chip placement, which tightens floor‑planning and spreads heat more evenly across the package. This layout creates a direct, low‑latency pathway between the SME2 core and the HBM4 stack, reducing the distance data must travel.

Thermal Management and Bandwidth Gains

Because the chips sit side‑by‑side rather than stacked, the thermal envelope stays within mobile limits while still delivering over 1 TB/s of memory bandwidth. The result is a cooler device that can sustain peak AI performance for longer periods.
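A rough back‑of‑the‑envelope calculation shows why the bandwidth figure matters for on‑device inference. The sketch below assumes a memory‑bound workload in which every model weight is read once per generated token. The 4 GB model size is a hypothetical value chosen for illustration, and 1 TB/s is used as the floor of the "over 1 TB/s" figure quoted above. Real throughput also depends on compute, caching and software overhead, so treat the result as an upper bound, not a prediction.

```python
def weight_stream_time_ms(model_bytes, bandwidth_bytes_per_s):
    """Milliseconds needed to read all model weights once from memory."""
    return model_bytes * 1e3 / bandwidth_bytes_per_s


def max_tokens_per_second(model_bytes, bandwidth_bytes_per_s):
    """Upper bound on tokens/s when each token requires one full weight pass."""
    return bandwidth_bytes_per_s / model_bytes


BANDWIDTH = 1e12    # 1 TB/s, the lower end of the quoted bandwidth
MODEL_BYTES = 4e9   # hypothetical 4 GB of weights (assumption for illustration)

print(weight_stream_time_ms(MODEL_BYTES, BANDWIDTH))   # 4.0 (ms per weight pass)
print(max_tokens_per_second(MODEL_BYTES, BANDWIDTH))   # 250.0 (tokens/s ceiling)
```

Under these assumptions, halving the bandwidth would halve the token ceiling, which is why memory bandwidth rather than raw compute often sets the limit for large models running locally.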

Impact on On‑Device AI and Edge Applications

- Richer augmented‑reality experiences on Galaxy phones.

- Faster speech‑to‑text conversion on wearables.

- More capable AI inference in industrial IoT edge boxes.

Roadmap for 2 nm AI Ramp‑Up and HBM4 Production

Samsung plans to begin mass‑producing HBM4 ahead of schedule, feeding the new CPU cores with the bandwidth they need. Arm SME2‑based CPUs are expected to begin sampling for high‑volume products by mid‑year, while 2 nm AI accelerators are slated for early shipments to key partners.

What This Means for Developers and Consumers

If you’re building AI models for mobile or edge devices, the tighter CPU‑memory integration means you can run larger models locally, cutting reliance on cloud inference. For everyday users, the combination translates into smoother AI‑driven features, from real‑time translation to intelligent camera modes, without the heat or battery drain of earlier devices.