OpenAI’s newest coding assistant, GPT‑5.3‑Codex‑Spark, runs on Cerebras’s wafer‑scale engine and delivers more than 1,000 tokens per second—about fifteen times faster than the previous Codex‑mini. The model is offered as a research preview for ChatGPT Pro users and promises near‑instant code suggestions that can keep your development flow uninterrupted and boost productivity.

Why GPT-5.3-Codex-Spark Matters for Developers

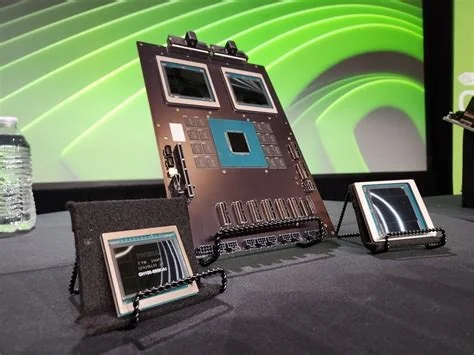

Serving a user‑facing model from hardware other than Nvidia GPUs suggests OpenAI is testing a diversification strategy that could lower costs and unlock ultra‑low‑latency use cases. If you need real‑time code completion in an IDE, the speed advantage can make the difference between a smooth workflow and a frustrating pause.

Speed Gains Over Previous Models

On Cerebras’s wafer‑scale engine, Spark processes just over 1,000 tokens per second. That is roughly fifteen times the throughput of Codex‑mini and nearly seven times OpenAI’s fastest Nvidia‑based inference, which tops out near 150 tokens per second. Because Cerebras hardware can sustain more than 2,000 tokens per second on larger models, the chip itself does not appear to be what caps Spark’s speed.
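To make these throughput figures concrete, here is a minimal sketch that converts them into wall‑clock time for a single completion. It uses only the rates quoted in this article. The Codex‑mini rate is derived from the "about fifteen times faster" claim rather than measured, and the 300‑token completion size is a hypothetical example. The sketch models steady‑state decoding only, so it ignores time‑to‑first‑token, prompt processing, and network overhead.

```python
# Throughput figures quoted in this article (tokens per second).
SPARK_TPS = 1_000                 # GPT-5.3-Codex-Spark on Cerebras
NVIDIA_TPS = 150                  # fastest Nvidia-based inference cited above
CODEX_MINI_TPS = SPARK_TPS / 15   # derived from the "about fifteen times" claim, not measured


def generation_seconds(num_tokens: int, tokens_per_second: float) -> float:
    """Time to stream num_tokens at a steady decode rate.

    Ignores time-to-first-token, prompt processing and network overhead,
    so real-world latency will be higher.
    """
    return num_tokens / tokens_per_second


def speedup(fast_tps: float, slow_tps: float) -> float:
    """Ratio of two decode rates."""
    return fast_tps / slow_tps


if __name__ == "__main__":
    completion_tokens = 300  # hypothetical size of a single-function completion
    rates = [
        ("Spark", SPARK_TPS),
        ("Nvidia-based", NVIDIA_TPS),
        ("Codex-mini (implied)", CODEX_MINI_TPS),
    ]
    for name, tps in rates:
        print(f"{name:>22}: {generation_seconds(completion_tokens, tps):.2f} s")
    print(f"Spark vs Nvidia-based: {speedup(SPARK_TPS, NVIDIA_TPS):.1f}x")
```

At these rates a 300‑token completion takes about 0.3 s on Spark, 2 s at 150 tokens per second, and 4.5 s at the implied Codex‑mini rate. The ratio of 1,000 to 150 tokens per second works out to about 6.7x.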

Trade‑offs Between Latency and Knowledge Depth

OpenAI designed Spark for speed, which means it sacrifices some depth of understanding. Benchmarks show Spark outpaces the older GPT‑5.1‑Codex‑mini in raw throughput but falls short on complex, multi‑step programming challenges compared with the full GPT‑5.3‑Codex. For many developers, the near‑instant feedback outweighs occasional gaps in nuanced reasoning.

How to Access the New Coding Model

Spark is currently available as a research preview for ChatGPT Pro subscribers ($200/month). You can reach it through the Codex command‑line interface, a Visual Studio Code extension, or the dedicated Codex app. API access is limited to a small group of design partners, and the model remains text‑only with a 128,000‑token context window.
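The 128,000‑token limit determines how much code you can hand the model at once. The following is a rough pre‑flight check, sketched under two loudly stated assumptions. First, it uses the common rule of thumb of about four characters per token instead of the model's actual tokenizer, so treat the result as an estimate only. Second, it reserves a hypothetical output budget (`reserve_for_output`, 8,000 tokens by default) in case prompt and response share the window. The function names are illustrative, not part of any OpenAI tool.

```python
# Context window size stated in this article for GPT-5.3-Codex-Spark.
CONTEXT_WINDOW_TOKENS = 128_000

# Assumption: ~4 characters per token is a rule of thumb for English text and
# code; it is NOT the model's real tokenizer, so counts are approximate.
CHARS_PER_TOKEN = 4


def estimate_tokens(text: str) -> int:
    """Rough token estimate from character count (ceiling division)."""
    return -(-len(text) // CHARS_PER_TOKEN)


def fits_in_context(texts: list[str], reserve_for_output: int = 8_000) -> bool:
    """Return True if the combined texts, plus a reserved output budget,
    are estimated to fit within the context window.

    reserve_for_output is a hypothetical default, not a documented limit.
    """
    total = sum(estimate_tokens(t) for t in texts)
    return total + reserve_for_output <= CONTEXT_WINDOW_TOKENS


if __name__ == "__main__":
    small_repo = ["x" * 400_000]   # ~100,000 estimated tokens
    large_repo = ["x" * 500_000]   # ~125,000 estimated tokens
    print(fits_in_context(small_repo), fits_in_context(large_repo))
```

With the default reservation, about 400,000 characters of source (roughly 100,000 estimated tokens) fits, while 500,000 characters does not. For exact counts you would need the tokenizer the model actually uses.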

Practitioner Insights

“The latency was always a bottleneck when I was iterating in VS Code,” says Maya Patel, a senior software engineer who tested the early‑access program. “With Spark, the first token appears almost instantly, and the editor feels responsive enough that I can treat the model like a pair‑programmer rather than a background tool.” Patel adds that for quick scaffolding or fixing a single function, the speed win outweighs occasional missteps, though she still falls back to the full GPT‑5.3‑Codex on Nvidia for deep architectural suggestions.

Implications for the AI Hardware Landscape

The partnership signals that wafer‑scale chips can deliver tangible benefits for latency‑sensitive AI services. If the performance‑cost equation holds, more developers might opt for specialized inference hardware for niche tasks while the broader market continues to rely on traditional GPUs. Future cloud offerings could include Cerebras‑backed instances, subtly shifting competitive dynamics in the AI hardware arena.