OpenAI’s new GPT-5.3 Codex delivers a 25% speed boost and broader reasoning, turning the model into a full‑cycle coding partner that can write, debug, and deploy code, and even draft documentation. You’ll notice quicker responses in interactive sessions, and the model now handles longer, more complex tasks without losing context.

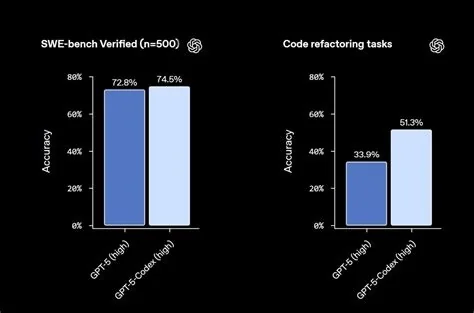

Key Performance Gains Over GPT-5.2 Codex

In internal benchmarks, GPT-5.3 Codex posted record scores on multi‑language coding tests and terminal‑operation challenges. It outperformed its predecessor while consuming fewer tokens, so each request gets more work done for the same token spend.

Expanded Capabilities Across the Software Lifecycle

The upgrade shifts focus from simple code suggestions to end‑to‑end workflow support. You can ask the model to debug, manage CI/CD pipelines, analyze test results, draft product requirements, edit copy, synthesize user research, and track metrics, all within a single prompt.

Mid‑Task Steering and Context Retention

Unlike earlier versions, GPT-5.3 Codex lets you pause, request status updates, and hand off the task to a new prompt without losing context. This makes the model feel more like a teammate than a static autocomplete tool.

Available Interfaces and Integration Roadmap

Today you can access GPT-5.3 Codex through a macOS desktop app, a web UI, an IDE extension, and a command‑line client. An API for broader integration is on the roadmap, so keep an eye out for future releases.

Real‑World Impact for Developers

Early adopters report that the faster inference cuts latency in tight feedback loops, while the richer toolset reduces the need for separate utilities. One engineer noted that spinning up a CI pipeline and receiving build status from the model eliminated a tedious context‑switching step.

Practical Considerations

- Cost management: Faster responses make it easy to run more requests in a sitting, which can raise overall compute usage, so monitor token consumption during long sessions.

- Governance: With capabilities extending to PRD writing and user‑research synthesis, teams should revisit policies around AI‑generated content.
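
For the cost‑management point above, here is a minimal sketch of how a team might keep a running token tally for one long session. Everything in it is an assumption for illustration: `TokenBudget`, its fields, and the 80% warning threshold are hypothetical names and values, not part of any OpenAI interface, and the token counts would have to come from whatever usage figures your chosen client actually reports.

```python
from dataclasses import dataclass, field


@dataclass
class TokenBudget:
    """Running token tally for one session (hypothetical helper, not an OpenAI API)."""

    limit: int                 # maximum tokens you are willing to spend this session
    warn_ratio: float = 0.8    # assumed fraction of the limit that triggers a warning
    used: int = 0              # tokens consumed so far
    history: list = field(default_factory=list)  # per-request totals, oldest first

    def record(self, prompt_tokens: int, completion_tokens: int) -> str:
        """Add one request's token counts and return 'ok', 'warn', or 'over'."""
        total = prompt_tokens + completion_tokens
        self.used += total
        self.history.append(total)
        if self.used > self.limit:
            return "over"
        if self.used >= self.limit * self.warn_ratio:
            return "warn"
        return "ok"

    def remaining(self) -> int:
        """Tokens left before the limit is reached, never negative."""
        return max(self.limit - self.used, 0)


# Example session with made-up token counts.
budget = TokenBudget(limit=10_000)
first = budget.record(1_200, 800)     # 2,000 used so far
second = budget.record(3_000, 3_500)  # 8,500 used so far, past the 80% mark
third = budget.record(1_000, 1_000)   # 10,500 used so far, over the limit
print(first, second, third, budget.remaining())
```

A tally like this only reports status; what to do on "warn" or "over" (pause the session, summarize and restart, or raise the limit) is a policy choice for each team.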

Future Outlook

OpenAI hints that its next general‑purpose model will follow a similar path, moving from conversational assistance to full‑stack task execution. If GPT-5.3 Codex proves reliable in production, other AI labs will feel pressure to match its agentic abilities.

In short, GPT-5.3 Codex isn’t just a faster version of its predecessor; it’s a more autonomous partner that can navigate the entire development lifecycle. Whether you’ll see measurable productivity gains depends on how quickly you integrate the new interfaces and trust the model’s broader decision‑making.