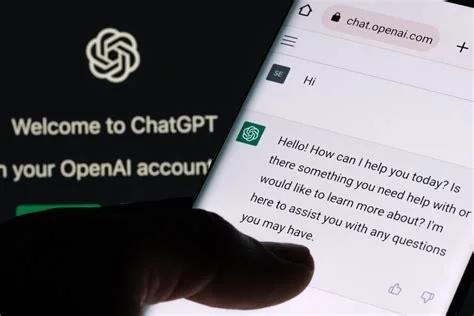

On February 3, OpenAI confirmed that ChatGPT experienced a major outage caused by two simultaneous technical problems. Users were unable to log in, the new voice mode failed to respond, and text generation returned incomplete answers. The disruption affected millions of consumers and enterprise customers and highlighted reliability challenges for the company's flagship AI service.

What Caused the Outage

OpenAI identified two active issues affecting the platform. The first involved backend infrastructure and was likely related to server load balancing and resource allocation. The second affected the voice‑mode pipeline, preventing real‑time audio interactions and causing errors in the text‑generation engine. Engineers began working on fixes immediately after the problems were reported.

Why the Outage Matters

Scale and Visibility

With tens of millions of daily users, any interruption is immediately visible. Both casual users and enterprise clients rely on ChatGPT for tasks such as customer‑support automation, content creation, and internal knowledge retrieval, so an outage hampers productivity across a broad range of workflows.

Operational Challenges

Running large language models in production demands low latency, high availability, and consistent quality across diverse hardware environments. That two separate issues occurred at the same time underscores how difficult it is to sustain that performance at scale.

Impact on Future Releases

OpenAI is preparing a new adult‑focused variant of ChatGPT that promises greater freedom of expression and nuanced content handling. Reliability concerns highlighted by the outage could influence user confidence in upcoming releases.

Implications for the AI Market

The incident comes amid a broader industry shift toward rapid monetisation of conversational AI. Competitors aiming to capture market share may use OpenAI’s temporary instability to showcase more robust uptime guarantees. However, OpenAI’s swift public acknowledgment and prompt work on fixes point to a mature incident‑management process that can limit reputational damage.

Practitioner Recommendations

- Implement fallback mechanisms: Use local language models or cached responses to maintain functionality during service interruptions.

- Monitor API performance: Track latency and error rates with monitoring tools to detect early warning signs.

- Plan for versioning: Follow OpenAI’s API versioning and documentation to stay informed about performance expectations and deprecation timelines.
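
The first two recommendations can be combined in a thin wrapper around whatever function calls the hosted model. The following is a minimal sketch, not a reference implementation: `FallbackClient`, `primary`, and `fallback` are hypothetical names introduced here for illustration, and no real OpenAI client call is shown. It assumes `primary` raises an exception when the service is unavailable.

```python
import time
from collections import deque
from typing import Callable, Deque, Dict, Tuple


class FallbackClient:
    """Wraps a primary text-generation call with a cache and a local fallback.

    `primary` and `fallback` are hypothetical callables supplied by the caller;
    each takes a prompt string and returns an answer string.
    """

    def __init__(
        self,
        primary: Callable[[str], str],
        fallback: Callable[[str], str],
        window: int = 20,
    ) -> None:
        self.primary = primary
        self.fallback = fallback
        self.cache: Dict[str, str] = {}
        # Rolling window of (succeeded, latency_seconds) for recent primary calls.
        self.recent: Deque[Tuple[bool, float]] = deque(maxlen=window)

    def error_rate(self) -> float:
        """Fraction of recent primary calls that failed (0.0 if none recorded)."""
        if not self.recent:
            return 0.0
        failures = sum(1 for ok, _ in self.recent if not ok)
        return failures / len(self.recent)

    def mean_latency(self) -> float:
        """Average duration in seconds of recent primary calls."""
        if not self.recent:
            return 0.0
        return sum(latency for _, latency in self.recent) / len(self.recent)

    def complete(self, prompt: str) -> Tuple[str, str]:
        """Return (answer, source); source is 'primary', 'cache', or 'fallback'."""
        start = time.monotonic()
        try:
            answer = self.primary(prompt)
        except Exception:
            # Assumption: any exception means the service is unavailable. A real
            # integration should catch only the client library's error types.
            self.recent.append((False, time.monotonic() - start))
            if prompt in self.cache:
                return self.cache[prompt], "cache"
            return self.fallback(prompt), "fallback"
        self.recent.append((True, time.monotonic() - start))
        self.cache[prompt] = answer
        return answer, "primary"
```

In use, an application would call `complete()` for every request and periodically export `error_rate()` and `mean_latency()` to its monitoring system, alerting when either crosses a threshold it has chosen. The exact‑match cache is deliberately simple; it only helps with repeated prompts.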

Future Outlook

OpenAI has indicated that a fix for the current issues is in progress and that it will post real‑time updates on its status page. As AI services become integral to both consumer experiences and enterprise workflows, uninterrupted service will be as critical as advancing model capabilities, and the next generation of ChatGPT will need dependable, scalable infrastructure to deliver on its promise.