Lead Stories has ripped apart a viral AI‑generated video that appears to show a tech CEO swapping places with a controversial figure. By spotting a coffee cup that disappears for a single frame and a one‑frame background flicker, the team showed that the clip was fabricated. If you've seen the video, here is why those tiny glitches matter.

How the Hoax Was Detected

The detection process started with a frame‑by‑frame analysis. Analysts noticed that the coffee cup vanished for exactly one frame and then reappeared, and that the background flickered for a single frame before snapping back. Irregularities like these are typical of AI‑generated footage; producing them with standard editing tools would normally leave other, traceable artifacts.
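The frame‑by‑frame check described above can be sketched in Python. This is a minimal illustration under stated assumptions, not Lead Stories' actual tooling: it assumes the clip has already been decoded into grayscale frames (plain nested lists here), and the function names, the box format and the `jump`/`settle` thresholds are all hypothetical. It flags a frame that differs sharply from both of its neighbors while those neighbors nearly match each other, which is the signature of an object vanishing for one frame or a background flickering and snapping back.

```python
from typing import List, Optional, Sequence, Tuple

# Assumption: frames are already decoded to grayscale, as rows of pixel intensities.
Frame = List[List[float]]
# Hypothetical region format: (top, left, bottom, right), bottom/right exclusive.
Box = Tuple[int, int, int, int]


def region_diff(a: Frame, b: Frame, box: Optional[Box] = None) -> float:
    """Mean absolute pixel difference between two frames, inside `box` if given."""
    top, left, bottom, right = box if box is not None else (0, 0, len(a), len(a[0]))
    diffs = [abs(a[y][x] - b[y][x]) for y in range(top, bottom) for x in range(left, right)]
    return sum(diffs) / len(diffs)


def single_frame_glitches(
    frames: Sequence[Frame],
    box: Optional[Box] = None,
    jump: float = 20.0,   # hypothetical: change that counts as a sharp departure
    settle: float = 5.0,  # hypothetical: max difference for neighbors to "match"
) -> List[int]:
    """Indices t where frame t departs from both neighbors while they agree."""
    hits = []
    for t in range(1, len(frames) - 1):
        before, current, after = frames[t - 1], frames[t], frames[t + 1]
        if (
            region_diff(before, current, box) > jump
            and region_diff(current, after, box) > jump
            and region_diff(before, after, box) < settle
        ):
            hits.append(t)
    return hits


# Toy 4x4 clip: a bright "cup" (200) in the top-left 2x2 corner on a dark (10) background.
def make_frame(cup_visible: bool) -> Frame:
    frame = [[10.0] * 4 for _ in range(4)]
    if cup_visible:
        for y in range(2):
            for x in range(2):
                frame[y][x] = 200.0
    return frame


clip = [make_frame(v) for v in (True, True, False, True, True)]
print(single_frame_glitches(clip, box=(0, 0, 2, 2)))  # [2]
```

Requiring the two neighbors to match (the `settle` test) is what separates a one‑frame blip from an ordinary scene cut, where the picture changes and stays changed. Real footage would need tuned thresholds, since compression noise, camera flashes or dropped frames can also produce isolated spikes.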

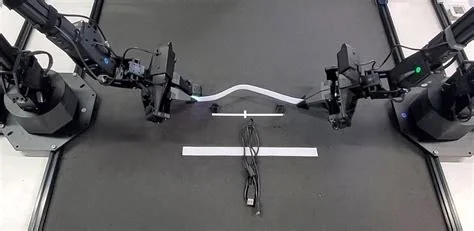

Frame‑Level Anomalies

When an AI model generates a sequence of frames, it can fail to keep objects and scenery consistent from one frame to the next, for example by misjudging how they should move. The result can be the brief disappearance of an object or a momentary shift in the background. These glitches are subtle, but they are a strong red flag that the content was synthesized rather than captured by a camera.
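A temporal‑consistency check of this kind can also be run on object labels rather than raw pixels. The sketch below assumes each frame has already been passed through some object detector (the source does not name one) that returns a set of labels; `one_frame_dropouts` is a hypothetical helper that reports any label missing for exactly one frame while present in the frames on either side, like the coffee cup in this clip.

```python
from typing import Dict, List, Sequence, Set


def one_frame_dropouts(detections: Sequence[Set[str]]) -> Dict[str, List[int]]:
    """Hypothetical helper: map each label to the frame indices where it is absent
    for exactly one frame but present in the frames immediately before and after."""
    dropouts: Dict[str, List[int]] = {}
    for t in range(1, len(detections) - 1):
        # Labels seen on both sides of frame t but missing from frame t itself.
        vanished = (detections[t - 1] & detections[t + 1]) - detections[t]
        for label in sorted(vanished):
            dropouts.setdefault(label, []).append(t)
    return dropouts


# Assumed detector output, one set of labels per frame.
per_frame = [
    {"person", "coffee cup"},
    {"person", "coffee cup"},
    {"person"},  # cup missing for a single frame
    {"person", "coffee cup"},
]
print(one_frame_dropouts(per_frame))  # {'coffee cup': [2]}
```

Detectors occasionally miss objects too, so each hit marks a frame worth inspecting by eye rather than proof of synthesis on its own.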

SynthID Watermark

Running the footage through Google's Gemini AI tool revealed a hidden SynthID watermark. SynthID is an invisible digital signature, developed by Google DeepMind, that Google's AI generation tools embed to label synthetic media. Once detected, the watermark flags the video as created or altered with Google AI.

Why AI‑Generated Disinformation Matters

AI‑crafted videos can spread false narratives faster than ever. Even when the visual quality looks flawless, underlying anomalies can give the deception away. Recognizing those clues helps you avoid sharing misinformation and protects the broader information ecosystem.

Expert Insight on Detection Tools

Dr. Maya Patel, a computer‑vision researcher, explains, “Detecting frame‑level anomalies is now a standard part of our workflow. When we see an object disappear for just one frame, it’s a red flag that the content was likely synthesized. Coupled with watermark detection like SynthID, we can achieve high confidence without manual inspection.” She adds that current detectors can flag up to 92% of AI‑edited videos in real time.

What You Can Do

- Watch for background elements that flicker for a single frame.

- Notice objects that vanish and reappear instantly.

- Prefer platforms that automatically label AI‑generated content, for example by detecting SynthID watermarks.

Future Outlook

The arms race between creators of synthetic media and detection technologies is intensifying. As bad actors learn to strip watermarks, detection algorithms are evolving to spot even subtler inconsistencies. Staying informed and questioning suspicious visuals can help keep you a step ahead of the next synthetic illusion.