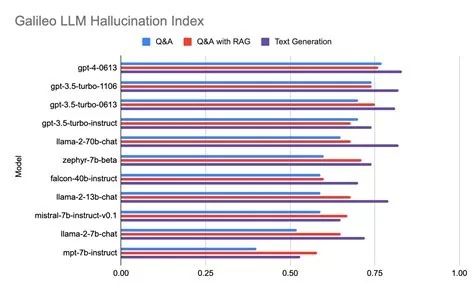

Defense teams are grappling with LLM hallucinations that can turn a single false fact into a strategic liability. Recent research shows hallucination rates ranging from under 1 % to nearly 100 % across different setups. By letting the model flag uncertainty and request a second reasoning pass, you can slash errors while keeping compute costs manageable.

Why Hallucinations Matter in Defense LLMs

In mission‑critical environments, a fabricated detail can mislead analysts, waste resources, or even endanger lives. The spread from 0.7 % to 94 % illustrates how model design, data curation, and decoding strategy all influence reliability. Understanding this variance is the first step toward a safer AI pipeline.

Uncertainty‑Aware Inference: How It Works

The core idea is simple: monitor the model’s own confidence signals and only trigger a deeper, more deliberate pass when uncertainty spikes. This selective re‑ask approach avoids the blanket overhead of double inference while still catching the most dubious outputs.

Entropy‑Aware Controller

An entropy‑aware controller watches the probability distribution of each token. When entropy crosses a predefined threshold, the system flags the response as uncertain and automatically initiates a second pass that encourages step‑by‑step reasoning.

Performance Gains

Applying this technique to a 12,000‑question benchmark lifted accuracy from roughly 61 % to over 84 % with only a 61 % increase in inference calls. A uniform re‑ask of every question achieved a marginal 0.3 % higher score, confirming that smart selection, not sheer volume, drives most of the improvement.

Practical Benefits for Defense Operations

- Operational risk management – You can embed a lightweight uncertainty monitor into existing pipelines, flagging only the most doubtful outputs for a second review.

- Compute budgeting – The 61 % overhead is far lower than the 100 % cost of naïve double inference, making it viable for on‑premise hardware with limited GPU headroom.

- Policy framing – The wide hallucination spread underscores the need for standardized reporting metrics, encouraging agencies to disclose uncertainty thresholds alongside performance scores.

Practitioner Insight

“In our testbed for tactical decision support, we saw hallucination spikes above 70 % when we let a vanilla LLM answer open‑ended queries without any confidence check,” says Dr. Maya Patel, senior AI engineer at a U.S. defense contractor. “Implementing the uncertainty‑triggered re‑ask loop cut those spikes to under 5 % while keeping latency within our real‑time budget.”

Looking Forward

The evidence positions uncertainty‑aware inference as a strong candidate for the default safety net in defense LLM deployments. Yet the so‑called alignment tax reminds us that over‑constraining a model can blunt its broader reasoning abilities. Future work will likely blend entropy signals with continual‑learning alignment methods, keeping both accuracy and safety in sync.