Deepfakes are now so convincing that even seasoned analysts struggle to tell real from fake. In the last few weeks, AI‑generated videos have mimicked faces, voices, and gestures with near‑perfect fidelity, sparking urgent calls for better safeguards. You’ll learn what makes these fakes dangerous, why existing tools fall short, and how new multimodal defenses aim to protect you.

Why Hyper‑Realistic Deepfakes Matter

Hyper‑realistic deepfakes blend visual, auditory, and textual cues, making single‑modality detection almost useless. Their rise has already eroded trust in digital communications, with scams using synthetic voices to bypass authentication and political ads blurring the line between satire and misinformation. When you receive a video that looks authentic, you can’t rely on intuition alone.

Current Detection Gaps

Most detection systems were built for still images, where they achieve up to 97% accuracy. They stumble on short videos, where human observers still outperform machines: about 63% of participants correctly identified synthetic clips, while the algorithms performed at chance level.

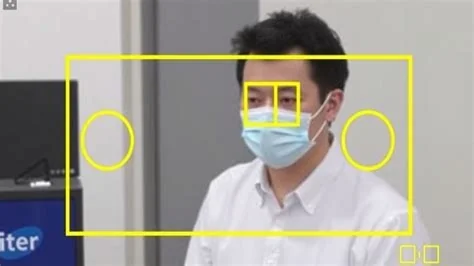

- Algorithms miss temporal inconsistencies such as mismatched micro‑expressions.

- Audio‑visual desynchronization often goes undetected.

- Background noise and lighting shifts provide clues that current models ignore.
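
To make the second gap concrete, here is a minimal sketch of how an audio‑visual offset could be estimated. It is not any detector's actual method. It assumes two hypothetical per‑frame series have already been extracted (a mouth‑opening measurement and an audio loudness value), and the function name `best_lag` and the sample values are illustrative only.

```python
def best_lag(video_signal, audio_signal, max_lag):
    """Return the lag (in frames) at which the two series align best.

    A positive lag means the audio trails the video by that many frames.
    Both inputs are hypothetical per-frame measurements, not real detector output.
    """
    # Center each series so that constant offsets do not dominate the score.
    v_mean = sum(video_signal) / len(video_signal)
    a_mean = sum(audio_signal) / len(audio_signal)
    video = [v - v_mean for v in video_signal]
    audio = [a - a_mean for a in audio_signal]

    def score(lag):
        # Unnormalized cross-correlation over the overlapping frames only.
        total = 0.0
        for i, v in enumerate(video):
            j = i + lag
            if 0 <= j < len(audio):
                total += v * audio[j]
        return total

    return max(range(-max_lag, max_lag + 1), key=score)


# Illustrative data: the audio peaks arrive two frames after the mouth opens.
mouth_opening = [0, 0, 1, 0, 0, 0, 1, 0, 0, 0]
loudness = [0, 0, 0, 0, 1, 0, 0, 0, 1, 0]
offset = best_lag(mouth_opening, loudness, max_lag=3)
```

A consistently non‑zero offset would be one possible hint of desynchronization, though genuine footage can also drift because of encoding or capture delays.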

Multimodal Defense Strategy

DeepFakeGuard’s v2 platform fuses video‑frame analysis with voice‑spectrogram comparison, targeting the subtle mismatch between facial muscle activation and speech acoustics. Early tests show 78% accuracy against the latest generation of synthetic media.
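
The cross‑modal idea can be sketched in a few lines. The code below is an assumption‑laden illustration, not DeepFakeGuard's implementation: it reduces raw audio samples to a per‑frame loudness envelope, then scores how poorly that envelope tracks a hypothetical mouth‑opening series. The names `rms_envelope`, `pearson`, and `mismatch_score` are invented for this example.

```python
import math


def rms_envelope(samples, frame_size):
    """Collapse raw audio samples into one RMS loudness value per frame."""
    return [
        math.sqrt(sum(s * s for s in samples[i:i + frame_size]) / frame_size)
        for i in range(0, len(samples) - frame_size + 1, frame_size)
    ]


def pearson(xs, ys):
    """Pearson correlation of two series, truncated to the shorter length."""
    n = min(len(xs), len(ys))
    xs, ys = xs[:n], ys[:n]
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        return 0.0  # A flat series carries no usable signal.
    return cov / math.sqrt(var_x * var_y)


def mismatch_score(mouth_opening, samples, frame_size):
    """Map correlation r in [-1, 1] to a mismatch in [0, 1] (0 = in step)."""
    r = pearson(mouth_opening, rms_envelope(samples, frame_size))
    return (1.0 - r) / 2.0


# Illustrative values: two audio samples per video frame.
audio_samples = [0, 0, 1, -1, 0, 0, 2, -2]
in_step = mismatch_score([0.0, 0.5, 0.0, 1.0], audio_samples, frame_size=2)
out_of_step = mismatch_score([1.0, 0.5, 1.0, 0.0], audio_samples, frame_size=2)
```

In this toy data the first mouth series rises and falls with the audio, so its mismatch is near zero, while the second moves in the opposite direction and scores near one. A real system would need face tracking and far more robust features than a single correlation.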

Key Features

- Real‑time verification layer that flags suspicious media as it’s uploaded.

- Combined visual and audio classifiers to catch cross‑modal anomalies.

- Human‑in‑the‑loop review to validate borderline cases.
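
As a rough illustration of how these three features might fit together, the sketch below fuses per‑modality scores and routes borderline results to a reviewer. The weights, thresholds, and function names are assumptions chosen for the example, not values published for the platform.

```python
def fuse_scores(visual, audio, mismatch, weights=(0.4, 0.3, 0.3)):
    """Weighted average of three suspicion scores, each expected in [0, 1].

    The weights are hypothetical and would need tuning on labeled data.
    """
    w_visual, w_audio, w_mismatch = weights
    return w_visual * visual + w_audio * audio + w_mismatch * mismatch


def triage(score, review_at=0.4, flag_at=0.7):
    """Route media by fused score: clear cases are automated, the rest go to a person."""
    if score >= flag_at:
        return "flag"
    if score >= review_at:
        return "human_review"
    return "pass"


# Illustrative uploads with made-up classifier outputs.
likely_fake = triage(fuse_scores(visual=0.9, audio=0.9, mismatch=0.9))
borderline = triage(fuse_scores(visual=0.5, audio=0.5, mismatch=0.5))
likely_real = triage(fuse_scores(visual=0.1, audio=0.1, mismatch=0.1))
```

Keeping a middle band between the two thresholds is one way to reserve reviewer time for the cases where the classifiers disagree or are unsure.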

Regulatory Landscape

Governments are introducing mandatory detection frameworks that require platforms to embed standardized verification tools. While these policies aim to curb the spread of deepfakes before they go viral, their success depends on industry adoption and the ability to keep pace with rapid model improvements.

Practitioner Perspective

Dr. Maya Singh, lead engineer at DeepFakeGuard, explains, “The most reliable signal we’ve found so far is a mismatch between facial muscle activation and the acoustic envelope of speech.” She adds, “It’s not a silver bullet, but combining AI with human review loops gives us the best chance to stay ahead.”

What You Can Do Today

If you encounter a suspicious video, pause and look for subtle cues: unnatural lip‑sync, odd lighting, or background sounds that don’t match the scene. Reporting doubtful content to platform moderators adds an extra layer of protection for everyone.

Hyper‑realistic deepfakes are no longer a novelty. They are a concrete safety threat to finance, politics, and personal interactions. By relying on multimodal AI defenses and staying vigilant, you can help keep synthetic media from slipping through the cracks.