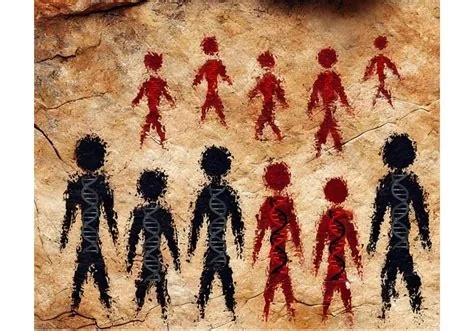

A recent study finds that popular generative‑AI tools still draw on half‑century‑old archaeology when they picture Neanderthals. Researchers asked DALL‑E 3 and ChatGPT (3.5) to create images and descriptions of Neanderthal daily life, and the output resembled 1960s textbook stereotypes. The findings suggest that AI‑generated visuals may reinforce outdated myths. If you trust those pictures without checking, you could spread misinformation.

Methodology: Prompt Design and Test Scale

Magnani and Clindaniel crafted four families of prompts. Two asked for generic Neanderthal scenes, while the other two asked for scientifically accurate depictions. Each prompt ran 100 times, producing 400 images in total, alongside a parallel set of 200 one‑paragraph texts. Some runs let DALL‑E rewrite the prompt for extra detail; others forced the model to stick to the exact wording.
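The image count follows directly from the design: four prompt families times 100 runs each. The short Python sketch below only enumerates that test matrix to make the arithmetic explicit. The family labels and the function name are hypothetical placeholders, since the article does not give the actual prompt wording.

```python
from itertools import product

# Hypothetical labels: the article only says that two families were generic
# and two asked for scientifically accurate depictions.
PROMPT_FAMILIES = ["generic_a", "generic_b", "accurate_a", "accurate_b"]
RUNS_PER_PROMPT = 100


def build_image_runs(families=PROMPT_FAMILIES, runs=RUNS_PER_PROMPT):
    """Return one (family, run_index) pair per image generation."""
    return list(product(families, range(runs)))


image_runs = build_image_runs()
print(len(image_runs))  # 4 families * 100 runs = 400
```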

Outdated Scientific Depictions

The AI’s output consistently echoed research from the early 1960s. When the team matched the content to a 20‑year research window, ChatGPT’s answers averaged around 1962‑64. That era portrayed Neanderthals as brutish, stooped hunters with little cultural nuance, an image that modern paleoanthropology has long since overturned. Symbolic behavior, diverse toolkits, and complex social structures barely appear in the generated material.

Embedded Gender Bias

Even when prompts explicitly demanded “expert‑level accuracy,” the models fell back on the old male‑hunter/female‑gatherer dichotomy. Women were shown gathering plants or caring for children, while men wielded oversized spears. This bias mirrors outdated scholarship and suggests the training data still carries skewed patterns.

Anachronistic Technological Details

Some images featured metal tools or fire‑making techniques that only emerged tens of thousands of years after Neanderthals disappeared. The researchers labeled these “implausible technological details,” highlighting how the AI can mix timelines and further distance its output from the archaeological record.

Implications for Users and Developers

Generative AI is often the first stop for students, creators, and curious readers. When the tools serve up visuals anchored in obsolete research, they risk cementing misconceptions for a new generation. Developers face a structural issue: without mechanisms to prioritize up‑to‑date sources, models will keep echoing decades‑old consensus.

Key Takeaways

- Training data matters: Current corpora appear to underrepresent the latest peer‑reviewed scholarship.

- Prompt engineering can help: Asking for citations or specifying a publication window may improve relevance, although asking for “expert‑level accuracy” alone did not remove the stereotypes.

- User vigilance is essential: Always verify AI‑generated content against recent research.
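As a rough illustration of the prompt‑engineering takeaway, the sketch below assembles a prompt that names a publication window and asks for dated citations. The function `build_prompt` and its parameters are hypothetical, it calls no real API, and nothing here guarantees that a model will honor the request.

```python
def build_prompt(subject, start_year, end_year, want_citations=True):
    """Compose a prompt that constrains sources to a publication window.

    Hypothetical helper for illustration only; it just builds a string.
    """
    if start_year > end_year:
        raise ValueError("start_year must not be later than end_year")
    parts = [
        f"Describe {subject}.",
        f"Base the answer only on research published between "
        f"{start_year} and {end_year}.",
    ]
    if want_citations:
        parts.append("Cite each source with its publication year.")
    return " ".join(parts)


print(build_prompt("Neanderthal daily life", 2015, 2025))
```

Any citations the model returns still need to be checked by hand, since the request only shapes the prompt and does not verify the answer.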

Recommendations for Better AI Outputs

To close the gap, developers could integrate curated academic databases into training pipelines and build “age‑grading” filters that favor recent findings. Prompt‑engineering guidelines should encourage users to request source dates or explicit references. Until those safeguards are in place, you’ll need to double‑check any AI‑produced Neanderthal image or description.
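One way to picture an “age‑grading” filter is as a recency weight applied to each source before it is used. The sketch below is a minimal illustration under stated assumptions, not a description of how any existing model or pipeline works: the exponential half‑life weighting, the threshold, and the names `Source`, `recency_weight`, and `age_grade` are all hypothetical, and the example titles are placeholders rather than real publications.

```python
from dataclasses import dataclass


@dataclass
class Source:
    title: str  # placeholder label, not a real reference
    year: int   # publication year


def recency_weight(year, current_year, half_life=10.0):
    """Halve a source's weight for every `half_life` years of age (assumed scheme)."""
    age = max(0, current_year - year)
    return 0.5 ** (age / half_life)


def age_grade(sources, current_year, min_weight=0.25):
    """Drop sources below `min_weight` and return the rest, newest first."""
    scored = [(recency_weight(s.year, current_year), s) for s in sources]
    kept = [pair for pair in scored if pair[0] >= min_weight]
    kept.sort(key=lambda pair: pair[0], reverse=True)
    return [s for _, s in kept]


corpus = [
    Source("old_textbook", 1962),
    Source("mid_paper", 2015),
    Source("recent_review", 2022),
]
for source in age_grade(corpus, current_year=2025):
    print(source.title, source.year)
```

A hard cutoff like this would also discard older work that remains valid, so a real system would likely down‑weight rather than drop; the threshold is here only to keep the example short.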