SK Hynix has become the exclusive supplier of fifth‑generation HBM3E memory for the Maia 200, Microsoft's in‑house AI accelerator. The deal provides 216 GB of high‑bandwidth memory across six 12‑layer stacks, which should raise data throughput, cut power consumption, and give Azure AI workloads a competitive edge.

Maia 200 AI Accelerator Overview

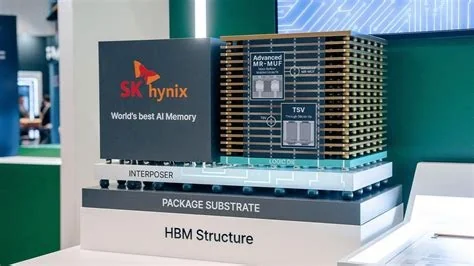

Architecture and Memory Configuration

The Maia 200 is a custom ASIC built on a 3‑nanometer process. It integrates six 12‑layer HBM3E stacks from SK Hynix, which provide 216 GB of memory in total and about 1.2 TB/s of bandwidth per stack. This configuration is designed to serve large‑scale inference models, and Microsoft aims to use it in place of conventional GPUs in select Azure services.
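The memory figures reduce to simple arithmetic. The following is a back‑of‑envelope sketch, not a Microsoft or SK Hynix specification. It multiplies the per‑stack numbers out to package totals. It also derives the per‑pin data rate that a 1.2 TB/s stack would need, assuming the standard 1024‑bit HBM interface per stack. The per‑stack bandwidth is read as terabytes per second, and the constant and function names are hypothetical.

```python
# Back-of-envelope figures for the Maia 200 memory subsystem, using the
# numbers quoted in the article. ASSUMPTION: each stack has a 1024-bit
# data interface (the usual HBM3/HBM3E width); this is not from the announcement.

STACKS = 6                    # HBM3E stacks on the package
CAPACITY_PER_STACK_GB = 36    # capacity of one 12-layer stack
BW_PER_STACK_TBPS = 1.2       # per-stack bandwidth, terabytes per second
INTERFACE_BITS = 1024         # assumed data-bus width per stack


def total_capacity_gb(stacks: int, per_stack_gb: float) -> float:
    """Aggregate capacity across all stacks."""
    return stacks * per_stack_gb


def aggregate_bandwidth_tbps(stacks: int, per_stack_tbps: float) -> float:
    """Peak aggregate bandwidth, assuming every stack runs at full rate."""
    return stacks * per_stack_tbps


def implied_pin_rate_gbps(per_stack_tbps: float, bus_bits: int) -> float:
    """Per-pin data rate (Gb/s) needed to reach the per-stack bandwidth."""
    bits_per_second = per_stack_tbps * 1e12 * 8   # bytes/s -> bits/s
    return bits_per_second / bus_bits / 1e9       # spread over the bus, in Gb/s


if __name__ == "__main__":
    cap = total_capacity_gb(STACKS, CAPACITY_PER_STACK_GB)
    bw = aggregate_bandwidth_tbps(STACKS, BW_PER_STACK_TBPS)
    pin = implied_pin_rate_gbps(BW_PER_STACK_TBPS, INTERFACE_BITS)
    print(f"Total capacity:       {cap:.0f} GB")
    print(f"Aggregate bandwidth:  {bw:.1f} TB/s (peak)")
    print(f"Implied per-pin rate: {pin:.1f} Gb/s")
```

Run as a script, it reports 216 GB of capacity, about 7.2 TB/s of aggregate peak bandwidth, and an implied rate of roughly 9.4 Gb/s per pin under the 1024‑bit assumption.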

SK Hynix HBM3E Advantages

Performance and Power Benefits

HBM3E offers higher bandwidth and lower power draw than previous HBM generations. Each 12‑layer stack contributes 36 GB of capacity, and its fast data access reduces latency for AI inference. Pairing this memory with a 3‑nm ASIC improves the overall energy efficiency of cloud‑based AI workloads.
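Dividing the stack capacity by its layer count gives the density of each DRAM die. This minimal sketch assumes that all 12 layers are identical DRAM dies holding the full 36 GB, with the stack's base logic die not counted as a layer. The names are illustrative only.

```python
# Per-die capacity implied by a 12-layer, 36 GB HBM3E stack.
# ASSUMPTION: the 12 layers are equal-capacity DRAM dies; the base
# logic die at the bottom of the stack stores no data and is excluded.

STACK_CAPACITY_GB = 36
DRAM_LAYERS = 12


def per_die_capacity_gb(stack_gb: float, layers: int) -> float:
    """Capacity of each DRAM die, in gigabytes."""
    return stack_gb / layers


def gigabytes_to_gigabits(gb: float) -> float:
    """DRAM die densities are usually quoted in gigabits (8 bits per byte)."""
    return gb * 8


die_gb = per_die_capacity_gb(STACK_CAPACITY_GB, DRAM_LAYERS)
print(f"Per-die capacity: {die_gb:.0f} GB ({gigabytes_to_gigabits(die_gb):.0f} Gb)")
# prints "Per-die capacity: 3 GB (24 Gb)"
```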

Impact on the AI Chip Ecosystem

Supply Chain and Competition

By securing a single memory partner, Microsoft streamlines integration and reduces design complexity. The exclusive deal intensifies competition among South Korean memory vendors, prompting accelerated development of next‑generation technologies such as HBM4.

Market Reaction and Outlook

Investor Sentiment

- SK Hynix shares rose sharply following the announcement, reflecting confidence in the new revenue stream.

- Competitor stocks showed modest gains, indicating broader market optimism.

Future Prospects for Microsoft and SK Hynix

Microsoft plans to expand Maia 200 deployments across additional Azure regions and explore training workloads. For SK Hynix, the exclusive contract provides a high‑visibility platform to showcase scalable, high‑bandwidth memory production, positioning the company for future ASIC contracts from cloud providers and AI vendors.