Microsoft announced on March 19 that Mustafa Suleyman, a veteran of the AI industry and co‑founder of DeepMind, will lead its newly created artificial‑intelligence venture as chief executive. The appointment, confirmed by Microsoft Chairman and CEO Satya Nadella in a brief statement from the company’s New Delhi office, underscores Microsoft’s ambition to integrate cutting‑edge generative models across its cloud, productivity, and developer platforms.

Within 24 hours of taking the helm, Suleyman issued a stark warning to the broader AI community. Speaking at a virtual summit organized by the company, he urged developers, researchers, and policymakers to place “containment” ahead of “alignment” when building increasingly autonomous systems. According to Suleyman, the industry’s current fixation on aligning AI with human values—while crucial—risks overlooking a more fundamental prerequisite: robust control mechanisms that can prevent runaway behavior before any moral or ethical goals are imposed.

Control Before Alignment

Suleyman framed his message around what he called a “Humanist Superintelligence” framework. The approach emphasizes practical, human‑centered applications—such as productivity assistance, knowledge retrieval, and decision‑support tools—while preserving human oversight at every stage of deployment. “We cannot afford to chase the holy grail of superintelligence without first ensuring we have the levers to stop it when it goes off‑track,” he said. “Control must precede alignment, or we risk creating autonomous agents that are both powerful and ungovernable.”

The call for containment reflects growing unease in the AI field about the pace of development. Since the release of large language models (LLMs) such as OpenAI’s GPT‑4 and Google’s Gemini, the industry has witnessed a surge in capabilities that blur the line between narrow task execution and more general reasoning. While many firms tout alignment research—training models to follow human instructions and avoid harmful outputs—Suleyman argues that alignment alone does not guarantee that a system will stay within intended operational boundaries.

A New Milestone for AGI

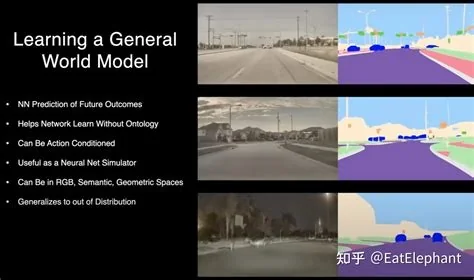

In a related commentary delivered four days after his appointment, Suleyman suggested that the next measurable milestone toward artificial general intelligence (AGI) will not be “how human‑like the output sounds,” but whether an AI agent can reliably achieve complex, multi‑step goals in real‑world environments. He cited recent experiments in which agents navigate simulated warehouses, negotiate with other bots, and adapt to changing constraints without human intervention. “If an AI can consistently plan, act, and correct its own mistakes in pursuit of a defined objective, we have crossed a more substantive threshold than mere conversational fluency,” he noted.

These remarks align with Microsoft’s ongoing partnership with OpenAI and its own internal research on autonomous agents. The tech giant has already embedded GPT‑4‑based copilots into Office, Azure, and GitHub, and it is testing “agentic” tools that can orchestrate multiple AI services to solve end‑to‑end tasks. Suleyman’s emphasis on containment suggests that future releases will incorporate tighter safety guardrails—such as real‑time monitoring, kill‑switches, and verifiable provenance of model decisions.

Background on Suleyman

Before joining Microsoft, Mustafa Suleyman co‑founded DeepMind in 2010, later serving as its head of applied AI. He left DeepMind in 2019 and, in 2022, co‑founded Inflection AI, a startup focused on building “personal AI assistants” that communicate in natural language. Inflection’s rapid fundraising rounds, culminating in a reported $4 billion valuation in 2023, caught the attention of major cloud providers, and in early 2024 Microsoft hired much of the company’s staff and licensed its technology. Suleyman’s track record of bridging cutting‑edge research with productisation makes him a logical choice to steer Microsoft’s AI ambitions.

Industry Implications

Suleyman’s containment‑first stance arrives at a moment when regulators worldwide are drafting AI governance frameworks. The European Union’s AI Act, the United States’ AI risk‑management guidelines, and various national AI strategies all grapple with the balance between innovation and safety. By publicly championing control mechanisms, Microsoft positions itself as a proactive participant in shaping those regulations, potentially influencing standards for model interpretability, auditability, and shutdown protocols.

Competitors are likely to watch Microsoft’s next moves closely. OpenAI, Anthropic, and Google DeepMind have each highlighted alignment research in recent blogs and papers, but few have foregrounded containment in public discourse. If Microsoft integrates stringent containment tools into its Azure AI services, enterprise customers may favor the platform for compliance reasons, increasing Microsoft’s share of AI‑enabled cloud workloads.

Challenges Ahead

Implementing effective containment is technically complex. “Control” can mean anything from limiting a model’s output length to enforcing hard constraints on actions taken in a physical or digital environment. Designing these safeguards without stifling useful functionality requires nuanced engineering and deep interdisciplinary collaboration—a challenge Suleyman acknowledges. “We must avoid over‑engineering that renders AI useless, but we also cannot ship systems that act beyond our ability to intervene,” he said.
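To make the two ends of that spectrum concrete, the following is a minimal Python sketch of a soft control (an output-length cap) alongside a hard control (an action allowlist with an operator kill switch). It is purely illustrative: the `ContainedAgent` class, its fields, and the action names are hypothetical and are not drawn from any Microsoft product or from Suleyman's remarks.

```python
from dataclasses import dataclass, field


class ContainmentError(Exception):
    """Raised when a requested action falls outside the permitted boundary."""


@dataclass
class ContainedAgent:
    # Hypothetical wrapper: every name and default here is an assumption
    # made for illustration, not a real API.
    allowed_actions: frozenset
    max_output_chars: int = 200
    halted: bool = False
    audit_log: list = field(default_factory=list)

    def emit(self, text: str) -> str:
        """Soft control: truncate output to a fixed number of characters."""
        return text[: self.max_output_chars]

    def act(self, action: str) -> str:
        """Hard control: refuse actions after a halt or outside the allowlist."""
        if self.halted:
            self.audit_log.append(("blocked_halted", action))
            raise ContainmentError("agent is halted")
        if action not in self.allowed_actions:
            self.audit_log.append(("blocked_not_allowed", action))
            raise ContainmentError(f"action not permitted: {action}")
        self.audit_log.append(("executed", action))
        return f"executed {action}"

    def kill(self) -> None:
        """Operator-side kill switch: no further actions are executed."""
        self.halted = True
```

In this sketch every attempted action, whether executed or blocked, is appended to `audit_log`, so an administrator could review what the agent tried to do before deciding to call `kill()`.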

Moreover, the call for a “Humanist Superintelligence” raises philosophical questions about whose values define “human‑centered.” As AI systems become more autonomous, oversight may shift from direct human control to supervision through metrics and dashboards, a transition that could blur lines of accountability.

Looking Forward

Suleyman’s early tenure will likely be marked by the rollout of pilot projects that embed containment mechanisms into Microsoft’s flagship AI products. Observers anticipate announcements around AI agents capable of orchestrating multi‑modal tasks while offering transparent logs for administrators to review and, if necessary, terminate operations.

Whether this “containment before alignment” paradigm gains traction across the industry remains to be seen. What is clear is that Microsoft, under Suleyman’s leadership, is positioning itself at the forefront of the debate on how—and whether—society can safely usher in the next generation of intelligent machines.
