Microsoft’s new Maia 200 AI inference chip delivers over 10 petaFLOPS at 4‑bit precision and integrates native FP8/FP4 tensor cores on a 3 nm process. Designed for Azure, the accelerator powers services such as Microsoft 365 Copilot and Azure OpenAI, offering up to 30 % better performance‑per‑dollar and reducing reliance on Nvidia GPUs.

Maia 200 AI Inference Chip Overview

Key Technical Specifications

- Transistor count: more than 140 billion per die

- Compute performance: >10 petaFLOPS at FP4, >5 petaFLOPS at FP8

- Power envelope: 750 W TDP

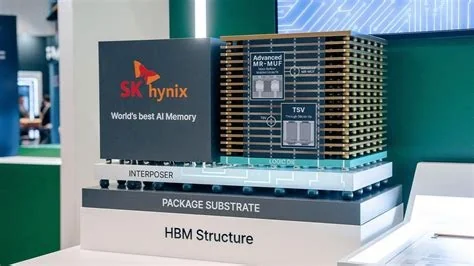

- Memory: 216 GB of HBM3e delivering 7 TB/s of bandwidth

- On‑chip storage: 272 MB SRAM with dedicated data‑movement engines
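The headline figures support some back-of-envelope arithmetic. The sketch below is not from Microsoft. It assumes the following:

- The ">10" and ">5" petaFLOPS figures are treated as exactly 10 and 5.
- Units are decimal.
- A hypothetical model's 4-bit weights occupy 100 GB.

With those assumptions, the sketch computes two things. The first is the roofline ridge point, meaning the arithmetic intensity at which a kernel stops being limited by HBM bandwidth. The second is a lower bound on a memory-bound decode step, in which every weight byte is read from HBM once per generated token.

```python
# Back-of-envelope roofline arithmetic from the Maia 200 headline specs.
# Assumptions (not from Microsoft): ">10" and ">5" petaFLOPS are treated as
# exactly 10 and 5 PF, units are decimal (1 TB = 1e12 bytes), and peak
# figures are used throughout, so sustained real-world numbers will be lower.

PEAK_FP4_FLOPS = 10e15      # >10 petaFLOPS at FP4, taken as 10
PEAK_FP8_FLOPS = 5e15       # >5 petaFLOPS at FP8, taken as 5
HBM_BYTES_PER_S = 7e12      # 7 TB/s of HBM3e bandwidth
HBM_CAPACITY_BYTES = 216e9  # 216 GB of HBM3e
TDP_WATTS = 750.0           # 750 W TDP


def ridge_point(peak_flops: float, bandwidth: float) -> float:
    """FLOP/byte at which a kernel moves from bandwidth-bound to compute-bound."""
    return peak_flops / bandwidth


def min_decode_step_seconds(weight_bytes: float, bandwidth: float) -> float:
    """Lower bound on one memory-bound decode step, assuming all weights are
    streamed from HBM once per step and ignoring KV-cache traffic."""
    if weight_bytes > HBM_CAPACITY_BYTES:
        raise ValueError("weights do not fit in a single chip's HBM")
    return weight_bytes / bandwidth


if __name__ == "__main__":
    fp4_ridge = ridge_point(PEAK_FP4_FLOPS, HBM_BYTES_PER_S)
    fp8_ridge = ridge_point(PEAK_FP8_FLOPS, HBM_BYTES_PER_S)
    print(f"FP4 ridge point: {fp4_ridge:.0f} FLOP/byte")
    print(f"FP8 ridge point: {fp8_ridge:.0f} FLOP/byte")
    print(f"Peak FP4 per watt: {PEAK_FP4_FLOPS / TDP_WATTS / 1e12:.1f} TFLOPS/W")

    # Hypothetical model whose 4-bit weights occupy 100 GB of HBM.
    step = min_decode_step_seconds(100e9, HBM_BYTES_PER_S)
    print(f"Decode step >= {step * 1e3:.1f} ms, i.e. <= {1 / step:.0f} steps/s")
```

With these inputs, the FP4 ridge point is roughly 1,430 FLOP/byte. Low-batch decode performs only a few FLOPs per weight byte, so it is bound by the 7 TB/s of HBM bandwidth rather than by peak FP4 compute. This helps explain the emphasis on on-chip SRAM and dedicated data-movement engines.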

Performance and Cost Advantages

Benchmark Comparisons

- Approximately three times the FP4 performance of Amazon Trainium 3

- FP8 performance surpasses Google TPU v7

- Up to 30 % higher performance‑per‑dollar versus current data‑center GPUs
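A performance‑per‑dollar gain is easy to misread as an equal cost saving. Here performance‑per‑dollar is assumed to mean throughput P divided by cost C; Microsoft's exact benchmark methodology is not given. Under that assumption, a 30 % improvement maps onto cost per unit of work as follows:

```latex
\mathrm{PPD} = \frac{P}{C},
\qquad
\frac{\mathrm{PPD}_{\text{Maia}}}{\mathrm{PPD}_{\text{GPU}}} = 1.3
\;\Longrightarrow\;
\frac{C_{\text{Maia}} / P_{\text{Maia}}}{C_{\text{GPU}} / P_{\text{GPU}}}
= \frac{1}{1.3} \approx 0.77
```

In other words, serving a fixed workload would cost roughly 23 % less, not 30 % less.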

Deployment Strategy and Developer Tools

Azure Region Rollout

The first Maia 200 units are live in the US Central Azure region near Des Moines, with expansion to US West 3 near Phoenix scheduled next.

Maia SDK Features

- PyTorch bindings for seamless model integration

- Triton compiler and optimized kernel library

- Low‑level programming language for fine‑grained control

- Tools to simplify porting across heterogeneous accelerators

Strategic Rationale for Microsoft’s Custom Silicon

Reducing Nvidia Dependence

By building Maia 200, Microsoft aims to lower its reliance on Nvidia GPUs for large‑scale inference, securing greater control over performance, cost, and supply‑chain dynamics.

Competitive Landscape

The chip places Microsoft alongside Google and Amazon, which already field proprietary AI accelerators (TPUs and Trainium). It also intensifies competition with Nvidia's GPUs in large-scale inference.

Market Impact and Future Outlook

Potential Benefits for Azure Customers

Once broader access is enabled, customers may see lower operating costs and higher inference throughput for workloads such as Copilot‑style assistants and synthetic‑data pipelines.

Roadmap and Expansion Plans

Microsoft plans to extend Maia 200 to additional Azure regions and to keep iterating on the silicon, strengthening its position in custom AI hardware.