The momentum behind open-source AI has never been stronger. With powerful models available through tools like Ollama and versatile workflow platforms like n8n, businesses can deploy highly customized, private AI systems. However, turning this potential into a stable, scalable reality requires significant infrastructure work. Organizations find themselves managing a complex stack: a local Ollama server for serving various models, an instance of Open WebUI for interaction, and a separate self-hosted n8n environment for automation. This fragmented approach, which often also requires secure access solutions like Cloudflare tunnels, quickly accumulates technical debt.

A new breed of integrated platform is emerging to address this challenge, from organisations such as the New Zealand company Black Sheep Ai (baa.ai). These platforms offer a private AI solution that replaces the need for a custom-built n8n server and self-managed model deployments. By consolidating the best elements of open-source and proprietary technology into a single, managed service, they are radically simplifying the path to enterprise-grade private AI.

Consolidating the Code-Automation Stack

The core struggle for many businesses is the synchronization between code, models, and automation. Developers using sophisticated IDEs like Cursor rely on high-quality code assistance, which is dramatically improved when the LLM has deep, private context. Similarly, creating automated processes requires the reliability of n8n.

When you choose the self-hosted n8n route, you gain control but lose simplicity. A managed solution, on the other hand, handles the deployment and scaling of the core engine, offering what can be described as an “n8n mcp” experience, where MCP stands for Model Context Protocol. This protocol gives the AI a structured way to discover and call the workflow engine’s capabilities as tools, so natural language requests can be turned into complex automation flows. Instead of manually configuring an n8n sequence, a user can simply instruct the system, perhaps leveraging the reasoning power of an integrated model like Claude, to build a multi-step process for data handling or internal communication.
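To make the idea concrete, here is a minimal sketch of the kind of message an AI client might send over MCP once it has decided to build a workflow. MCP messages follow JSON-RPC 2.0, and tools are invoked with the `tools/call` method. The tool name `create_workflow` and its arguments are hypothetical, chosen only for illustration; the tools a given platform actually exposes may look quite different.

```python
import itertools
import json

# Simple counter so each JSON-RPC request gets a distinct id.
_request_ids = itertools.count(1)


def build_tool_call(tool_name, arguments):
    """Build an MCP-style JSON-RPC 2.0 request that invokes a tool."""
    return {
        "jsonrpc": "2.0",
        "id": next(_request_ids),
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }


# Hypothetical tool and arguments: a model could emit something like this
# after being asked to "post new support tickets to the team channel".
request = build_tool_call(
    "create_workflow",
    {
        "name": "Ticket notifications",
        "steps": ["watch_new_tickets", "summarise_ticket", "post_to_channel"],
    },
)
print(json.dumps(request, indent=2))
```

The point of the sketch is the division of labour: the model chooses the tool and fills in the arguments, while the workflow engine remains responsible for validating and executing them.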

This unification transforms the concept of an “n8n self hosted ai starter kit” from a complex assembly project into an instantly available service.

Generative AI: From Desktop to Enterprise Privacy

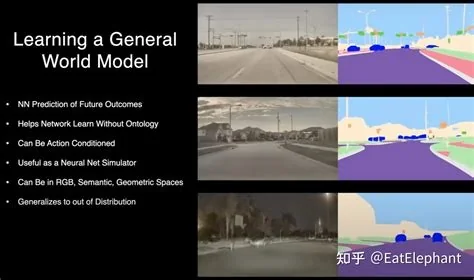

The shift toward integration is equally important in the realm of generative media. Tools like Stable Diffusion and its powerful, node-based companion, ComfyUI, have reshaped content creation. However, moving a high-performance self-hosted Stable Diffusion setup from a single machine to a secure, shared enterprise environment is costly and technically demanding.

A managed private AI platform eliminates this barrier. It provides the same powerful, customizable image generation capabilities—effectively offering a private, high-throughput ComfyUI backend—that can be accessed securely by everyone in the organization.
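As a rough illustration of what shared access to such a backend could look like, the sketch below prepares a request in the style of ComfyUI's own HTTP interface, which queues a workflow graph via a POST to `/prompt`. The host name is hypothetical, the workflow is an incomplete fragment shown only for its shape, and a managed platform may well put its own authentication and API in front of this.

```python
import json
import urllib.request
import uuid


def build_prompt_request(base_url, workflow, client_id=None):
    """Prepare (but do not send) a request that queues a workflow graph."""
    payload = {
        "prompt": workflow,  # node graph in ComfyUI's API export format
        "client_id": client_id or uuid.uuid4().hex,
    }
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/prompt",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# Illustrative fragment only: a real graph also needs loader, sampler,
# decode and save nodes, typically exported from the ComfyUI editor.
workflow_fragment = {
    "6": {
        "class_type": "CLIPTextEncode",
        "inputs": {"text": "product shot, studio lighting", "clip": ["4", 1]},
    }
}

# Hypothetical internal host name; replace with the real endpoint.
req = build_prompt_request("https://comfy.internal.example", workflow_fragment)
print(req.get_method(), req.full_url)
# Sending is left to the caller, e.g. urllib.request.urlopen(req)
```

Because the request never targets a public service, the prompt text and the generated images stay inside whatever network boundary the backend is deployed in.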

The key benefit here is privacy. When generating proprietary marketing images or confidential internal documents, the data never leaves the secure, isolated environment. This is a crucial answer to the privacy concerns that dominate much of the AI news today.

The Future of Infrastructure

The current era of AI innovation is defined by the tension between power and simplicity. Users want the powerful, cutting-edge results delivered by Ollama models and the sophisticated reasoning of services like Claude. They want the deep automation of n8n. But they cannot afford the perpetual maintenance and security risks associated with piecing together an entire infrastructure from scratch.

By providing a single, integrated, and private solution that obviates the need for juggling separate Ollama deployments, n8n servers, and Stable Diffusion setups, these next-generation platforms are paving the way for wider, more secure, and more productive enterprise AI adoption. The future is not about self-hosting individual components; it’s about consuming AI as a private, integrated utility.