The rise of powerful open-source Large Language Models (LLMs) and generative AI has unlocked unprecedented productivity for businesses, but it has also introduced a significant operational bottleneck: the complexity of self-hosting. For months, developers and organizations have struggled to piece together an infrastructure stack, which means managing GPU resources for local model runners like Ollama, deploying workflow automation on a self-hosted n8n server, and securing access through tunneling services such as Cloudflare Tunnel. This cobbled-together approach is resource-intensive and fragile, and it often falls short of enterprise privacy requirements.

Now, a new player is disrupting this landscape. Black Sheep Ai (baa.ai), envisioned as a “Business-grade AI Automation” solution built on robust security and privacy standards, offers an integrated, private AI platform designed to replace both your custom n8n server and your locally managed Ollama instance. By combining the best open-source capabilities with an enterprise-grade managed layer, baa.ai promises to democratize powerful, private AI.

The Self-Hosting Headaches: Ollama, Open WebUI, and n8n

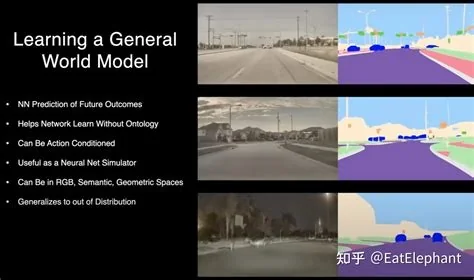

The excitement surrounding local models is palpable, driven by tools like Ollama, which makes running open-weight models such as Llama 3 or Mistral remarkably simple on a desktop. Scaling this into a secure, organization-wide service, however, requires serious DevOps expertise. Users often pair Ollama with a front end like Open WebUI to get a chat interface, but this is only the beginning.
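To show how simple the single-desktop case is, here is a minimal Python sketch that queries a locally running Ollama server through its REST API. It assumes a default install listening on `http://localhost:11434` and that a model named `llama3` has already been pulled; the helper names `build_generate_request` and `generate` are illustrative, not part of Ollama. It uses only the standard library and turns streaming off so the reply arrives as a single JSON object.

```python
import json
import urllib.request

# Assumption: a stock Ollama install listening on its default local port.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_generate_request(model: str, prompt: str, url: str = OLLAMA_URL) -> urllib.request.Request:
    """Build a non-streaming POST request for Ollama's /api/generate endpoint."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(model: str, prompt: str, url: str = OLLAMA_URL, timeout: float = 120.0) -> str:
    """Send the prompt to a running Ollama server and return the generated text."""
    request = build_generate_request(model, prompt, url)
    with urllib.request.urlopen(request, timeout=timeout) as resp:
        body = json.loads(resp.read().decode("utf-8"))
    # With stream=False, the whole completion is in the "response" field.
    return body["response"]


# Usage (requires a running server and a pulled model):
#   generate("llama3", "Summarize what n8n does in one sentence.")
```

This covers one user on one machine. Nothing in it handles authentication, multi-user access, or GPU scheduling, which is where the organization-wide DevOps effort begins.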

The real complexity arrives when automation is needed. Many organizations rely on a workflow automation tool like n8n. Self-hosting n8n provides full control, but setting up a reliable, scalable instance, maintaining its dependencies, and then exposing it securely to the rest of the company is a full-time job. You need to configure API keys, manage secrets, and possibly set up a Cloudflare Tunnel just to reach the automation server securely. The ideal scenario, a self-hosted n8n AI starter kit that simply works, has remained hard to achieve in practice because of the sheer number of moving parts.

baa.ai’s Integrated Solution: The Power of MCP and Claude

baa.ai eliminates this integration friction by providing a single, private environment where these components are already harmonized. Instead of managing independent services, users tap into a unified platform. The most striking innovation lies in its approach to automation and intelligence.

The platform abstracts away the complexity of running a dedicated n8n server while retaining the power of its workflow engine. Crucially, baa.ai leverages the Model Context Protocol (MCP), offering an “n8n MCP” experience as a managed service. MCP is an open protocol that lets an LLM discover the tools a server exposes, including their descriptions and input schemas, and then call them. That is what allows capable LLMs, including external models like Claude (which can be securely connected via baa.ai), to understand the full capabilities of n8n’s automation nodes. As a result, users don’t need to drag and drop nodes manually; they simply describe the desired process, for example “When a new lead arrives in Salesforce, use an LLM to enrich the data, then send an introductory email,” and the integrated AI builds the complete workflow automatically.
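The discover-then-invoke pattern that MCP standardizes can be illustrated with a toy, in-process Python sketch. It mimics the JSON-RPC 2.0 message shapes MCP uses for `tools/list` and `tools/call`, but it is not the official MCP SDK, not an n8n API, and not baa.ai’s implementation. The `create_workflow` tool, its schema, and the `tool` and `handle` helpers are hypothetical. What matters is that the model reads each tool’s name, description, and `inputSchema`, and can then emit a structured call for a request like the lead-enrichment example above.

```python
import json
from typing import Any, Callable, Dict

# Hypothetical registry standing in for an MCP server that exposes automation capabilities.
# Tool names, descriptions, and schemas are illustrative, not a real n8n or baa.ai API.
TOOLS: Dict[str, Dict[str, Any]] = {}
HANDLERS: Dict[str, Callable[..., Any]] = {}


def tool(name: str, description: str, input_schema: Dict[str, Any]):
    """Register a function as a tool and record the metadata a model would read."""
    def decorator(fn: Callable[..., Any]) -> Callable[..., Any]:
        TOOLS[name] = {"name": name, "description": description, "inputSchema": input_schema}
        HANDLERS[name] = fn
        return fn
    return decorator


@tool(
    "create_workflow",
    "Create an automation workflow from an ordered list of node types.",
    {
        "type": "object",
        "properties": {
            "name": {"type": "string"},
            "nodes": {"type": "array", "items": {"type": "string"}},
        },
        "required": ["name", "nodes"],
    },
)
def create_workflow(name: str, nodes: list) -> dict:
    # A real server would call the workflow engine here; this only echoes a summary.
    return {"workflow": name, "steps": len(nodes)}


def handle(message: str) -> str:
    """Dispatch one JSON-RPC 2.0 request using MCP-style method names."""
    req = json.loads(message)
    if req["method"] == "tools/list":
        # Discovery: the model learns what exists and how to call it.
        result = {"tools": list(TOOLS.values())}
    elif req["method"] == "tools/call":
        # Invocation: the model supplies a tool name plus arguments matching inputSchema.
        params = req["params"]
        output = HANDLERS[params["name"]](**params["arguments"])
        result = {"content": [{"type": "text", "text": json.dumps(output)}]}
    else:
        # -32601 is the standard JSON-RPC 2.0 "Method not found" error code.
        return json.dumps({"jsonrpc": "2.0", "id": req.get("id"),
                           "error": {"code": -32601, "message": "Method not found"}})
    return json.dumps({"jsonrpc": "2.0", "id": req["id"], "result": result})
```

In a real deployment, the LLM client would send these messages over an MCP transport rather than calling `handle` directly, and the tool list would be generated from the actual automation nodes rather than hand-written.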