Enterprise leaders can now watch a focused 84‑minute session that breaks down cloud versus on‑premise GPU strategies for generative AI. Hosted by Koenig Solutions and led by trainer Akwinder Kaur, the webinar delivers a data‑driven framework to help decision‑makers choose the optimal deployment model for large‑scale language models and other AI workloads.

Key Takeaways from the Generative AI GPU Webinar

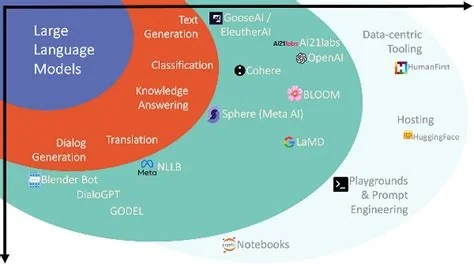

Evaluating Cloud GPU Options

The webinar highlights Microsoft Azure’s pay‑as‑you‑go GPU portfolio, including the ND A100 v4 series, as a flexible entry point for organizations that want to minimize upfront capital. Cloud GPUs offer rapid scaling, global reach, and usage‑based pricing, which suits experimental projects and bursty workloads.

Assessing On‑Premise GPU Deployments

On‑premise clusters are presented as a long‑term investment that can lower per‑inference costs and provide tighter control over proprietary data. This model is especially attractive for regulated industries that demand low latency and strict data‑sovereignty compliance.

Decision Framework for Enterprises

Total Cost of Ownership

- Cloud: Operational expense (OPEX) model with usage‑based pricing.

- On‑Premise: Capital expense (CAPEX) upfront, with lower ongoing compute fees that are partly offset by recurring power, cooling, and staffing costs.
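The OPEX versus CAPEX trade‑off above comes down to a break‑even calculation. The sketch below is a minimal illustration, not the webinar's own model: the function names and every figure (purchase price, monthly running cost, hourly cloud rate, GPU‑hours consumed) are hypothetical placeholders to be replaced with real quotes.

```python
def cumulative_cloud_cost(months, gpu_hours_per_month, cloud_rate_per_gpu_hour):
    """Usage-based (OPEX) spend after a given number of months."""
    return months * gpu_hours_per_month * cloud_rate_per_gpu_hour


def cumulative_on_prem_cost(months, capex, monthly_opex):
    """Upfront purchase (CAPEX) plus recurring power, cooling, and staffing."""
    return capex + months * monthly_opex


def break_even_month(capex, monthly_opex, gpu_hours_per_month,
                     cloud_rate_per_gpu_hour, horizon_months=60):
    """First month in which on-premise is no more expensive than cloud.

    Returns None if on-premise never catches up within the horizon.
    """
    for month in range(1, horizon_months + 1):
        on_prem = cumulative_on_prem_cost(month, capex, monthly_opex)
        cloud = cumulative_cloud_cost(month, gpu_hours_per_month,
                                      cloud_rate_per_gpu_hour)
        if on_prem <= cloud:
            return month
    return None


if __name__ == "__main__":
    # Hypothetical inputs: 8 GPUs kept busy around the clock (about 5,840
    # GPU-hours per month) at an assumed rate of 3 currency units per GPU-hour.
    print(break_even_month(capex=400_000, monthly_opex=5_000,
                           gpu_hours_per_month=5_840,
                           cloud_rate_per_gpu_hour=3))  # prints 32
```

With these assumed inputs the on‑premise cluster pays for itself in month 32. Dropping utilization to a few hundred GPU‑hours per month pushes the break‑even point beyond the five‑year horizon, which is why utilization is usually the most sensitive input.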

Latency and Performance

- Cloud solutions may introduce network latency for data‑intensive tasks.

- On‑premise clusters place compute closer to data sources, minimizing latency for real‑time applications.
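One way to make the latency comparison concrete is a simple budget check: add the network round trip to the model's inference time and compare the total with what the application can tolerate. The sketch below assumes a hypothetical `Workload` record and made‑up round‑trip figures; real values would come from measurement.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    name: str
    latency_budget_ms: float  # end-to-end latency the application tolerates
    inference_ms: float       # time spent on the GPU per request


def fits_budget(workload, network_round_trip_ms):
    """True if inference plus network round trip stays within the budget."""
    total_ms = workload.inference_ms + network_round_trip_ms
    return total_ms <= workload.latency_budget_ms


def viable_sites(workload, round_trip_ms_by_site):
    """Names of the sites whose round-trip time keeps the workload in budget."""
    return [site for site, rtt in round_trip_ms_by_site.items()
            if fits_budget(workload, rtt)]


if __name__ == "__main__":
    # Hypothetical numbers for illustration only.
    chat = Workload(name="chat-assistant", latency_budget_ms=300, inference_ms=220)
    sites = {"on_prem": 2, "nearby_cloud_region": 60, "distant_cloud_region": 120}
    print(viable_sites(chat, sites))  # prints ['on_prem', 'nearby_cloud_region']
```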

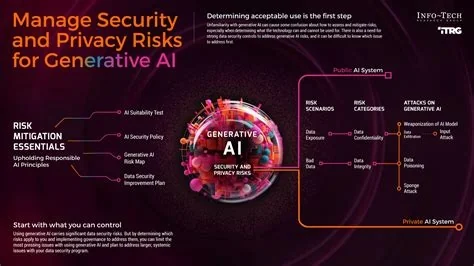

Security and Compliance

- On‑premise deployments simplify adherence to data‑sovereignty regulations.

- Azure confidential computing and workload encryption aim to narrow the security gap for cloud users.

Hybrid Strategy and Next Steps

Combining Cloud Burst Capacity with On‑Premise Baseline

Many enterprises benefit from a hybrid approach: use cloud GPUs for experimental and peak demand workloads while maintaining a baseline of on‑premise capacity for production‑grade inference. This balances cost efficiency, performance, and compliance.
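The baseline‑plus‑burst idea can be sketched as a split of hourly GPU demand: on‑premise capacity absorbs demand up to its limit, and anything above that spills to the cloud. The function name, the demand series, and the capacity figure below are illustrative assumptions, not data from the webinar.

```python
def split_demand(hourly_gpu_demand, on_prem_capacity):
    """Split each hour's GPU demand into an on-premise share and a cloud burst."""
    on_prem = [min(demand, on_prem_capacity) for demand in hourly_gpu_demand]
    cloud = [max(demand - on_prem_capacity, 0) for demand in hourly_gpu_demand]
    return on_prem, cloud


def on_prem_utilization(on_prem_usage, on_prem_capacity):
    """Fraction of the available on-premise GPU-hours that were actually used."""
    return sum(on_prem_usage) / (on_prem_capacity * len(on_prem_usage))


if __name__ == "__main__":
    # Hypothetical demand over five hours, in GPUs needed per hour.
    demand = [4, 6, 12, 20, 8]
    on_prem, cloud = split_demand(demand, on_prem_capacity=8)
    print(on_prem)                          # prints [4, 6, 8, 8, 8]
    print(cloud)                            # prints [0, 0, 4, 12, 0]
    print(sum(cloud))                       # prints 16 (cloud GPU-hours)
    print(on_prem_utilization(on_prem, 8))  # prints 0.85
```

Sizing the baseline is then a matter of trying different `on_prem_capacity` values against historical demand: a larger baseline reduces cloud GPU‑hours but lowers on‑premise utilization.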

Actionable Checklist for Leaders

- Quantify multi‑year total cost of ownership for each deployment model.

- Map latency requirements to specific workload profiles.

- Align security policies with the chosen infrastructure, considering both on‑premise controls and cloud encryption features.

- Plan for hybrid integration to leverage the strengths of both environments.