Bar associations and courts are rapidly adopting stricter rules for AI‑generated legal documents after a surge of fabricated citations and false references, known as “hallucinations,” began appearing in filings. New guidelines demand verification, transparency, and competence, and courts are prepared to sanction attorneys whose inaccurate AI‑produced filings risk undermining judicial integrity.

Why Courts Are Targeting AI Hallucinations

Judges have reported an increasing number of filings that contain non‑existent cases, invented quotes, and misleading legal arguments produced by generative AI tools. These errors threaten the accuracy of the record, waste judicial resources, and can prejudice case outcomes, prompting courts to act decisively.

High‑Profile Errors Trigger Action

A recent courtroom incident revealed a citation to a case that did not exist, exposing how AI can fabricate legal references. The discovery sparked immediate concern among judges, who warned that unchecked AI use could flood the system with unreliable information.

Bar Association Guidance and New Responsibilities

State bar associations are issuing formal advisories that outline attorneys’ duties when using AI. The guidance emphasizes three core principles: accuracy, transparency, and confidentiality.

State Bar Advisories

- Accuracy: Lawyers must verify every AI‑generated citation and quotation before filing.

- Transparency: Any use of AI in legal research or drafting must be disclosed to clients and, when appropriate, to the court.

- Confidentiality: Attorneys should avoid uploading sensitive client data to public AI platforms.

Prohibitive State Measures

- Some states have banned AI for translating legal forms, court orders, or any content that could affect case outcomes.

- Additional checkpoints require attorneys to document verification steps and retain original sources.
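
One way to document verification steps is a simple per-citation log kept with the matter file. The following is a minimal sketch, not a format any bar or court prescribes: the `CitationCheck` fields, the helper names, and the placeholder citations are all hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass, field, asdict
from datetime import datetime, timezone
import json


@dataclass
class CitationCheck:
    """One verification entry for a single citation (hypothetical schema)."""
    citation: str          # citation text as it appears in the draft
    source_consulted: str  # where the authority was confirmed by a human
    verified: bool         # True only if the authority exists and supports the point
    checked_by: str        # person who performed the check
    checked_at: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )


def unverified(checks):
    """Return the citations that have not passed verification."""
    return [c.citation for c in checks if not c.verified]


def to_json(checks):
    """Serialize the log so it can be retained alongside the original sources."""
    return json.dumps([asdict(c) for c in checks], indent=2)


# Placeholder entries; these are not real citations.
log = [
    CitationCheck("Placeholder citation A", "official reporter", True, "reviewer-1"),
    CitationCheck("Placeholder citation B", "not found in any database", False, "reviewer-1"),
]
blocked = unverified(log)  # citations that should hold up the filing
```

In this sketch a filing would proceed only when `unverified(log)` is empty, and the JSON output would be stored with copies of the sources consulted.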

Court Sanctions for AI‑Generated Falsehoods

Courts are adapting existing procedural rules to penalize submissions that contain AI‑generated inaccuracies. Sanctions are applied under professional‑responsibility standards and procedural provisions that address false statements.

Sanction Framework

- Reduced penalties when attorneys voluntarily acknowledge AI errors and correct the record.

- Severe penalties for repeated or deliberate misuse of AI, especially when false citations are numerous.

- Sanctions may include monetary fines, mandatory training, or referral to disciplinary boards.

Global Scope of AI Hallucination Issues

Recent research shows that AI‑generated hallucinations are not confined to one jurisdiction. Analyses of hundreds of cases worldwide reveal a consistent pattern of fabricated citations and misleading reasoning, underscoring the need for safeguards that apply across jurisdictions.

Judicial Expectations for AI Evidence

Judges are beginning to treat AI outputs as secondary sources that must meet the same evidentiary standards as human‑generated material. They require clear provenance, verifiable timestamps, and, when possible, supporting audio or documentary records before granting weight to AI‑produced evidence.
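
To illustrate what provenance and a timestamp might look like in practice, the sketch below records a content hash of an AI output together with the time it was captured. It is an assumption-laden example rather than a standard courts have adopted: the function names and record fields are hypothetical, and a self-recorded timestamp would still need independent corroboration to be treated as verifiable.

```python
import hashlib
from datetime import datetime, timezone


def _sha256(text: str) -> str:
    """Hex SHA-256 digest of a UTF-8 string."""
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


def provenance_record(output_text: str, tool_name: str, prompt: str) -> dict:
    """Build a hypothetical provenance record for one AI output."""
    return {
        "tool": tool_name,                       # which AI tool produced the text
        "prompt_sha256": _sha256(prompt),        # fingerprint of the input
        "output_sha256": _sha256(output_text),   # fingerprint of the output
        "recorded_at": datetime.now(timezone.utc).isoformat(),  # capture time (UTC)
    }


def matches(record: dict, candidate_text: str) -> bool:
    """Check whether a later copy of the text is identical to what was recorded."""
    return record["output_sha256"] == _sha256(candidate_text)
```

A record like this lets a reviewer confirm that the text offered later is byte-for-byte the text that was originally generated; it says nothing about whether that text is accurate.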

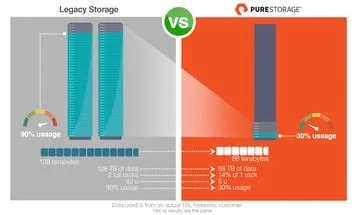

Impact on Legal‑Tech Vendors

The tightening regulatory environment creates both challenges and opportunities. Vendors that embed verification layers, provenance tracking, and secure data handling into their AI tools are likely to gain favor with risk‑averse law firms and courts. Conversely, providers of opaque or open‑source models may see reduced adoption.

Future Outlook for AI Regulation in Law

Bar associations and courts are expected to continue refining AI policies, with upcoming advisories and legislative drafts that would codify verification requirements for all filings. As the legal profession balances efficiency gains against the need for factual accuracy, rigorous oversight will remain essential to protect the integrity of the justice system.