Anthropic’s Model Context Protocol (MCP) is an open‑source standard that lets applications built on large language models connect directly to databases, CRMs, wearables, and other enterprise services. By replacing many point‑to‑point integrations with a single standard, MCP streamlines development, cuts costs, accelerates AI‑driven business workflows, and lets teams scale faster.

Why MCP Solves the AI Integration Chaos

Before MCP, each new AI model required a separate integration for every data source, creating a multiplicative “N × M” problem: N models and M data sources need N × M custom adapters, a load that overwhelmed engineering teams. MCP consolidates these connections into one reusable interface, so each model and each data source implements the protocol only once, eliminating redundant code and reducing error‑prone custom adapters.
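The difference is easy to see with a little arithmetic. The sketch below compares the number of integrations needed when every model is wired to every data source against the number needed when each side implements one shared protocol. The function names and the counts are hypothetical, chosen only for illustration.

```python
def point_to_point(models: int, sources: int) -> int:
    """Integrations needed when every model is wired to every data source."""
    return models * sources


def shared_protocol(models: int, sources: int) -> int:
    """Integrations needed when each model and each source implements one protocol."""
    return models + sources


# Hypothetical team sizes, for illustration only.
for n, m in [(3, 10), (5, 40), (10, 100)]:
    custom = point_to_point(n, m)
    shared = shared_protocol(n, m)
    print(f"{n} models, {m} sources: {custom} custom adapters vs {shared} protocol implementations")
```

The gap widens as either side grows, because adding one data source costs one new implementation instead of one per model.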

From N×M Problem to a Single Connector

MCP defines three core capabilities (Tools, Resources, and Prompts) that any compliant client can consume through a single connector. This unified approach lets developers focus on product differentiation rather than repetitive integration plumbing.

Key Capabilities of the Model Context Protocol

- Tools – Functions the model can call, such as database queries or API actions.

- Resources – Contextual data like documentation, user preferences, or device telemetry.

- Prompts – Templated instructions that guide model behavior and output formatting.
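One way to picture the three capabilities is as three small record types. The following is a simplified sketch, not the protocol's actual schema: the class fields, the `lookup_customer` tool, the `prefs://` URI, and the `summarize` prompt are all hypothetical names introduced for illustration.

```python
from dataclasses import dataclass
from typing import Any, Callable


@dataclass
class Tool:
    """A function the model can call."""
    name: str
    description: str
    handler: Callable[..., Any]


@dataclass
class Resource:
    """Contextual data the model can read."""
    uri: str
    text: str


@dataclass
class Prompt:
    """A templated instruction with named placeholders."""
    name: str
    template: str

    def render(self, **values: str) -> str:
        return self.template.format(**values)


# Hypothetical examples of each capability.
lookup = Tool(
    name="lookup_customer",
    description="Fetch a CRM record by id.",
    handler=lambda customer_id: {"id": customer_id, "tier": "gold"},
)
prefs = Resource(uri="prefs://user/42", text="Preferred language: English")
summary = Prompt(name="summarize", template="Summarize the following for {audience}:\n{text}")

print(lookup.handler(customer_id=7))
print(prefs.text)
print(summary.render(audience="executives", text="Q3 revenue grew."))
```

Tools carry behavior, Resources carry data, and Prompts carry reusable wording, which is why the sketch gives only `Tool` a callable.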

How MCP Works in Practice

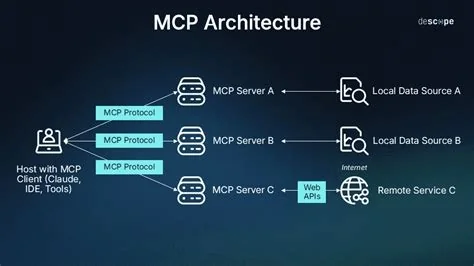

MCP follows a client‑server model. An AI application (the client) queries an MCP server for available Tools, invokes a function with parameters, and receives a structured response. This decouples the scaling of AI models from the scaling of backend services.

Three‑Stage Flow: Discovery, Invocation, Response

During Discovery, the client retrieves the catalog of Tools and Resources. In the Invocation stage, it calls a specific Tool with the required arguments. Finally, the server returns a formatted Response, enabling seamless integration without custom code.
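The three stages can be sketched with plain dictionaries. MCP messages are JSON-RPC 2.0, and the method names `tools/list` and `tools/call` below follow the published specification as best understood here. Everything else is an assumption for illustration: the `handle` function is a toy in-process stand-in for a server, `get_order_status` is a hypothetical tool, and the initialization handshake a real session performs first is omitted.

```python
import json

# Hypothetical tool registry, for illustration only.
TOOLS = {
    "get_order_status": {
        "description": "Look up the status of an order by id.",
        "inputSchema": {
            "type": "object",
            "properties": {"order_id": {"type": "string"}},
            "required": ["order_id"],
        },
        "handler": lambda args: f"Order {args['order_id']} has shipped.",
    }
}


def handle(request: dict) -> dict:
    """Toy in-process server that answers tools/list and tools/call."""
    method = request["method"]
    params = request.get("params", {})
    reply = {"jsonrpc": "2.0", "id": request["id"]}

    if method == "tools/list":
        reply["result"] = {
            "tools": [
                {"name": name, "description": t["description"], "inputSchema": t["inputSchema"]}
                for name, t in TOOLS.items()
            ]
        }
    elif method == "tools/call":
        tool = TOOLS.get(params.get("name"))
        if tool is None:
            reply["error"] = {"code": -32602, "message": "Unknown tool"}
        else:
            text = tool["handler"](params.get("arguments", {}))
            reply["result"] = {"content": [{"type": "text", "text": text}], "isError": False}
    else:
        reply["error"] = {"code": -32601, "message": "Method not found"}
    return reply


# 1. Discovery: ask which tools exist.
catalog = handle({"jsonrpc": "2.0", "id": 1, "method": "tools/list"})

# 2. Invocation: call one tool with arguments.
response = handle({
    "jsonrpc": "2.0",
    "id": 2,
    "method": "tools/call",
    "params": {"name": "get_order_status", "arguments": {"order_id": "A-100"}},
})

# 3. Response: a structured result the client can hand back to the model.
print(json.dumps(response, indent=2))
```

Because the catalog includes a JSON Schema for each tool's inputs, a client can validate arguments before invoking anything.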

Recent Milestones Demonstrating Maturity

Since its launch, MCP has been adopted by major AI platforms and is governed as an open‑source project under the Agentic AI Foundation. Broad client support now includes leading models and development environments, reinforcing MCP’s status as a community‑driven standard.

Open‑Source Governance and Broad Platform Support

The protocol’s stewardship by a neutral foundation ensures transparent evolution, while first‑class client libraries are available for popular AI platforms, IDEs, and orchestration tools.

Transport Improvements Enable Scalable Deployments

The initial local STDIO transport has been joined by a streamable HTTP transport, which allows remote, distributed deployments that support load‑balancing, fault tolerance, and serverless architectures. STDIO remains available for servers that run on the same machine as the client.
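The transport only changes how a message travels, not what it contains. As a rough sketch of the local case, the code below frames JSON-RPC messages as one JSON document per line, which is how a STDIO-style pipe is commonly handled. The helper names are hypothetical and an in-memory `StringIO` stands in for the real pipe between two processes. Over HTTP, the same JSON body would instead be sent in a request to the server's endpoint.

```python
import io
import json


def write_message(stream, message: dict) -> None:
    """Serialize one message as a single newline-terminated line."""
    stream.write(json.dumps(message, separators=(",", ":")) + "\n")


def read_messages(stream):
    """Yield one parsed message per non-empty line."""
    for line in stream:
        line = line.strip()
        if line:
            yield json.loads(line)


# StringIO stands in for the pipe between client and server processes.
pipe = io.StringIO()
write_message(pipe, {"jsonrpc": "2.0", "id": 1, "method": "tools/list"})
write_message(pipe, {"jsonrpc": "2.0", "id": 2, "method": "ping"})

pipe.seek(0)
received = list(read_messages(pipe))
print([m["method"] for m in received])
```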

MCP Apps Bring Interactive UI to Conversations

The new MCP Apps extension lets tools return interactive UI components—dashboards, forms, visualizations—directly within a conversational flow, turning static responses into actionable interfaces.

Benefits for Developers and Enterprises

By eliminating custom adapters, MCP dramatically shortens development cycles, reduces operational overhead, and improves security through a single audited integration layer.

Accelerated Development Cycles

Teams can connect any LLM to business tools through one integration point, so AI‑powered features become a baseline capability rather than a project that requires a new connector for each model.

Unified Security and Compliance Layer

A centralized MCP server enforces authentication, rate limiting, and data‑privacy policies for all downstream models, simplifying compliance and enhancing overall security posture.
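A minimal sketch of such a policy layer follows, assuming a simple token check and a sliding-window rate limit applied before any tool call is dispatched. The `Gate` class, its field names, and the limits are hypothetical and not part of MCP itself. A production deployment would rely on a real identity provider and shared state rather than an in-memory dictionary.

```python
import time
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Gate:
    """Hypothetical policy gate placed in front of every tool call."""
    valid_tokens: set
    max_calls: int            # allowed calls per window, per token
    window_seconds: float
    _calls: dict = field(default_factory=dict)

    def check(self, token: str, now: Optional[float] = None) -> str:
        now = time.monotonic() if now is None else now
        if token not in self.valid_tokens:
            return "unauthorized"
        # Keep only the timestamps that still fall inside the window.
        recent = [t for t in self._calls.get(token, []) if now - t < self.window_seconds]
        if len(recent) >= self.max_calls:
            self._calls[token] = recent
            return "rate_limited"
        recent.append(now)
        self._calls[token] = recent
        return "ok"


gate = Gate(valid_tokens={"secret-a"}, max_calls=2, window_seconds=60)

# Explicit timestamps make the behavior deterministic for the example.
results = [gate.check("secret-a", now=t) for t in (0, 1, 2, 61)]
print(results)
print(gate.check("unknown-token", now=0))
```

Because every model reaches backend tools through the same gate, a policy change is made in one place instead of in each adapter.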

Future Outlook for MCP

Roadmap initiatives focus on stateless, serverless transport mechanisms and richer multimodal interactions. As MCP continues to evolve, it aims to become the universal “new API” that lets any future LLM plug into existing digital ecosystems with the ease of a single USB‑C cable.