Apple is fast‑tracking three AI‑powered wearables that will extend Siri’s capabilities beyond the phone. The lineup includes smart glasses, an AI‑enabled pendant, and AirPods with built‑in cameras, all designed to see and hear your surroundings. These devices aim to deliver hands‑free, context‑aware experiences that blend vision and audio in everyday accessories.

What Apple Is Building

Smart Glasses

The smart glasses, internally codenamed N50, feature a high‑resolution camera and a secondary sensor that streams visual data to Siri. You’ll interact primarily through voice, asking Siri to identify objects, translate text, or guide you to a destination. The frames are crafted from premium materials and house an embedded battery for all‑day use.

AI‑Enabled Pendant (AI Pin)

The AI pin is a discreet pendant that includes a low‑resolution, always‑on camera and a built‑in microphone. It continuously supplies visual context to Siri, allowing the assistant to respond to your surroundings without you having to lift a finger. Apple has not committed to shipping it, and no release date has been announced.

AI‑Powered AirPods

Apple’s next‑generation AirPods will integrate a tiny camera, turning the earbuds into a visual AI platform. The camera lets the earbuds recognize nearby objects, provide live translation, and trigger context‑aware reminders, while still delivering the familiar audio experience you expect.

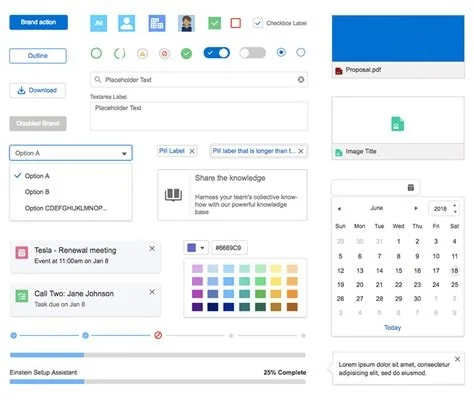

Key Features Across the Lineup

- Multimodal Siri: Combines voice commands with camera and microphone input for richer interactions.

- Seamless Ecosystem Integration: All devices sync effortlessly with the iPhone.

- All‑Day Battery Life: Optimized power management keeps the wearables running when you need them.

- Premium Build: High‑quality materials ensure durability and a sleek look.

Why Apple Is Accelerating AI Wearables

Apple sees a clear opportunity to embed generative AI into hardware that people wear every day. By adding cameras and sensors, Siri evolves from a voice‑only assistant to a multimodal companion that can answer “What’s this plant?” or “How far is that building?” without you tapping a screen. It’s a natural next step after the Apple Watch’s health sensors.

Impact for Developers and Users

For developers, the new sensor suite could open up APIs that blend audio, vision, and on‑device AI. That would make it possible to build apps that offer health insights, navigation aids, and lightweight AR experiences, with data processed securely on the device.

For everyday users, the wearables promise a more intuitive way to interact with technology. Imagine getting instant translations while walking down the street, or having Siri point out landmarks as you explore a new city, all without pulling out your phone.

What to Expect Next

Apple hasn’t released official specifications yet, but the engineering focus is clear: deliver reliable performance, maintain battery life, and ensure the devices feel natural in daily use. Keep an eye on upcoming announcements, because the rollout of these AI wearables could reshape how you experience the Apple ecosystem.