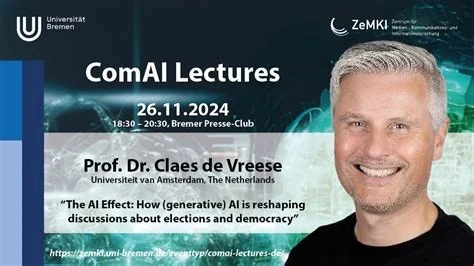

Waseda University is set to explore how generative AI reshapes political campaigns when Professor Claes de Vreese delivers a research talk on AI‑driven election threats. The session will unpack AI‑crafted ads, misinformation tactics, and emerging safeguards, giving you a clear view of what’s at stake for voters and democracy.

Why Generative AI Is a Game‑Changer for Elections

Large language models can produce hyper‑personalised political content in seconds, turning data into persuasive narratives that feel tailor‑made for each voter. At that speed and scale, misinformation can spread faster than traditional fact‑checking can respond, challenging the integrity of electoral discourse.

Core Questions de Vreese Will Tackle

- Weaponisation: How are AI tools being used to create ultra‑targeted political ads?

- Regulation: What safeguards can regulators and platforms implement without silencing legitimate speech?

- Measurement: How can scholars accurately assess AI‑driven misinformation’s impact on voter behaviour?

Potential Safeguards and Transparency Measures

Some experts propose mandatory attribution for AI‑generated political content, analogous to campaign finance disclosures. If each AI‑crafted post were tagged with its data source, platforms could give voters a quick way to check its authenticity. Such tags might appear as footnotes like “Source: public archive” beneath political memes and videos.

Balancing Free Speech and Protection

Any safeguard must strike a balance: over‑regulation could stifle genuine political expression, while under‑regulation leaves the door open to deceptive campaigns. Ongoing dialogue between technologists, policymakers, and scholars aims to find a workable middle ground.

Implications for the Average Voter

If platforms adopt clear attribution, AI‑generated political posts could routinely carry source labels. This could make it easier to trace misinformation back to its origin, but the real test is whether users actually read those labels. Their effectiveness will depend on how prominently they are displayed and how closely you engage with the content.

What You Can Do Today

- Look for source tags on political content and question posts that lack them.

- Support platforms that prioritise transparency and robust fact‑checking tools.

- Stay informed about emerging AI capabilities that could influence future elections.

Looking Ahead

De Vreese’s talk reflects a shift from theoretical debate toward actionable strategies. By bringing together academia, industry, and policymakers, Waseda hopes to spark concrete steps toward protecting democratic processes from AI‑driven manipulation. Keep an eye on the post‑talk Q&A livestream for real‑time insights and a chance to put your own questions to the speaker.