OpenAI has filed a formal complaint alleging that Chinese startup DeepSeek is copying its advanced language‑model capabilities through a process called model distillation. According to the complaint, DeepSeek trains a smaller chatbot on OpenAI’s outputs, sidesteps OpenAI’s safeguards, and offers the result for free. OpenAI argues that the practice could undercut U.S. AI businesses and raise security concerns.

How Model Distillation Works

Model distillation lets a compact AI model learn from the responses of a larger, more powerful system. By training a smaller model on the big model’s outputs, developers can create cheaper versions that approximate the original’s performance.

Distillation Process Explained

- Data Collection: Developers query the larger model and collect large volumes of the text it generates.

- Training Loop: The smaller model’s parameters are adjusted iteratively until it reproduces those outputs.

- Optimization: Engineers then tune the distilled model to run efficiently on modest hardware.
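The first two steps can be illustrated with a toy sketch. It is not how any production system is trained: the "teacher" here is a hypothetical fixed probability distribution over a four-token vocabulary (`TEACHER_PROBS`), and the "student" is just a list of logits fitted by gradient descent on cross-entropy. All names are assumptions made for illustration.

```python
import math
import random

# Hypothetical teacher: a fixed next-token distribution over 4 tokens.
TEACHER_PROBS = [0.6, 0.25, 0.1, 0.05]


def softmax(logits):
    """Convert logits to probabilities in a numerically stable way."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def collect_teacher_outputs(n_samples, rng):
    """Data collection: sample token ids from the teacher's distribution."""
    token_ids = range(len(TEACHER_PROBS))
    return rng.choices(token_ids, weights=TEACHER_PROBS, k=n_samples)


def train_student(samples, vocab_size, epochs=500, lr=1.0):
    """Training loop: fit student logits to the sampled teacher outputs."""
    counts = [0] * vocab_size
    for token in samples:
        counts[token] += 1
    empirical = [c / len(samples) for c in counts]

    logits = [0.0] * vocab_size  # student starts out uniform
    for _ in range(epochs):
        probs = softmax(logits)
        # Gradient of mean cross-entropy w.r.t. the logits is (probs - empirical).
        logits = [l - lr * (p - e) for l, p, e in zip(logits, probs, empirical)]
    return logits


def distill(n_samples=5000, seed=0):
    """Run both steps and return the student's learned distribution."""
    rng = random.Random(seed)
    samples = collect_teacher_outputs(n_samples, rng)
    return softmax(train_student(samples, len(TEACHER_PROBS)))


if __name__ == "__main__":
    print("teacher:", TEACHER_PROBS)
    print("student:", [round(p, 3) for p in distill()])
```

The student never sees the teacher's internals, only its sampled outputs, which is why the technique works through an ordinary API. Real distillation replaces the logit list with a neural network and the four tokens with full text, but the structure of the loop is the same.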

Why OpenAI Is Raising the Alarm

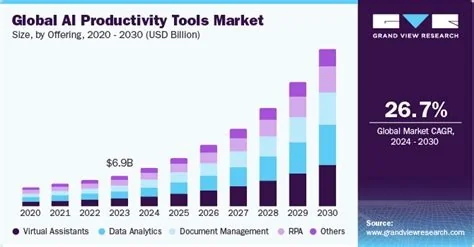

OpenAI argues that the practice isn’t just a technical shortcut; it threatens the commercial and safety foundations of the industry. When a competitor reproduces capabilities without bearing the massive compute costs, the original provider’s revenue stream is put at risk.

Economic Impact

U.S. firms have invested billions in compute clusters and data pipelines. If you’re a developer relying on subscription revenue, a free alternative that mirrors your product can quickly erode your market share.

Security Risks

Distilled models often inherit the original’s strengths as well as its weaknesses, but not necessarily its built‑in safeguards. Without those safeguards, the copy can be misused in high‑risk areas such as bio‑engineering or chemical synthesis. That’s why OpenAI urges policymakers to treat illicit distillation as a national‑security concern.

Potential Policy Responses

Lawmakers could consider several steps to curb unauthorized distillation:

- Strengthen export controls on high‑performance AI hardware and software.

- Mandate watermarking of model outputs to make unauthorized copying easier to detect.

- Impose stricter rate limits and monitoring on API usage.

- Require clear terms of service that prohibit using outputs for competing products.
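Of these measures, API rate limiting is the easiest to sketch in code. The example below is a minimal sliding-window limiter, assuming each caller is identified by an API key; the class name `SlidingWindowLimiter` and its parameters are hypothetical and do not reflect how any particular provider enforces limits.

```python
from collections import defaultdict, deque


class SlidingWindowLimiter:
    """Allow at most max_requests per API key within any window_seconds span."""

    def __init__(self, max_requests, window_seconds):
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        # Maps each API key to the timestamps of its recently accepted requests.
        self._history = defaultdict(deque)

    def allow(self, api_key, now):
        """Return True and record the request if the key is under its limit."""
        window = self._history[api_key]
        # Drop timestamps that have aged out of the window.
        while window and now - window[0] >= self.window_seconds:
            window.popleft()
        if len(window) >= self.max_requests:
            return False  # over the limit; the caller could also log this event
        window.append(now)
        return True


if __name__ == "__main__":
    limiter = SlidingWindowLimiter(max_requests=3, window_seconds=60.0)
    for t in (0.0, 1.0, 2.0, 3.0, 60.0):
        print(t, limiter.allow("example-key", now=t))
```

The timestamp is passed in explicitly to keep the sketch easy to test; a real service would more likely read a monotonic clock. A limiter like this only slows bulk collection. Detecting distillation would also require the monitoring mentioned above, for example by reviewing keys that are rejected repeatedly.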

What This Means for AI Developers

If you’re building or using large language models, you’ll need to balance openness for legitimate research with robust enforcement mechanisms. Expect tighter access controls, more aggressive monitoring, and possibly new licensing requirements. Staying ahead means protecting your data pipelines as fiercely as you innovate on your models.