India’s Ministry of Electronics and Information Technology has rolled out new rules requiring that AI‑generated audio, video, and images be clearly labeled on online platforms. The amendment to the 2021 Intermediary Guidelines requires platforms to add visible tags, embed machine‑readable metadata, and take down harmful deepfakes within three hours of receiving a notice. The move aims to protect users from deceptive synthetic media.

What Counts as Synthetic Media

The updated framework defines “synthetically generated information” (SGI) as any content created or altered by an algorithm so that it appears real, authentic, or true. That includes deepfake videos, AI‑generated voice‑overs, face‑swapped images, and text‑to‑image outputs that could be mistaken for genuine reporting.

Definition and Key Exceptions

Not every edit falls under SGI. Routine adjustments such as colour correction, compression, translation, or accessibility enhancements are exempt, provided they don’t materially change the underlying meaning. Educational drafts, conceptual illustrations, and other good‑faith uses also remain outside the labeling requirement.
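As a rough illustration of how a platform might encode these carve‑outs, the sketch below checks whether a set of algorithmic edits falls entirely within the routine categories listed above. The function name, the edit identifiers, and the two boolean flags are all hypothetical. Whether an edit materially changes meaning, or whether a use is in good faith, is a judgment the rules leave to people, so the code only takes those answers as inputs.

```python
from collections.abc import Iterable

# Routine adjustments named in the article; the identifiers are assumptions.
ROUTINE_EDITS = frozenset({
    "colour_correction",
    "compression",
    "translation",
    "accessibility_enhancement",
})


def requires_label(
    edits: Iterable[str],
    changes_meaning: bool,
    good_faith_use: bool = False,
) -> bool:
    """Return True if algorithmically edited content would need an SGI label.

    Simplified reading of the exemptions described in the article,
    not a statement of the legal test.
    """
    # Educational drafts, conceptual illustrations, and similar good-faith uses.
    if good_faith_use:
        return False
    # Routine edits are exempt only if the underlying meaning is unchanged.
    only_routine = set(edits) <= ROUTINE_EDITS
    if only_routine and not changes_meaning:
        return False
    return True
```

For example, `requires_label({"colour_correction", "compression"}, changes_meaning=False)` returns `False`, while any edit outside the routine set, such as a hypothetical `"face_swap"`, returns `True` unless the good‑faith flag is set.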

New Compliance Requirements for Platforms

User Prompts

Platforms must ask creators, at least once every three months and in any of the Eighth Schedule languages, to declare whether the uploaded content is AI‑generated. If you operate a service, this prompt becomes a regular part of the upload workflow.

Labeling & Metadata

Every piece of SGI must display a visible label, such as “AI‑generated,” and carry embedded machine‑readable metadata that downstream services can detect. This dual approach is intended to let both human viewers and automated tools identify synthetic content.
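To make the dual requirement concrete, here is a minimal sketch of a record that pairs a visible label with machine‑readable metadata. The article does not describe a required schema, so the class name, the field names, and the choice of JSON are assumptions made purely for illustration. A real deployment would follow whatever format the rules or an adopted provenance standard specify.

```python
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone


@dataclass
class SgiLabel:
    # Hypothetical schema: the field names are illustrative only.
    content_id: str
    synthetic: bool
    visible_label: str          # text shown to human viewers
    declared_by_uploader: bool  # True if the creator self-declared
    labeled_at: str             # ISO 8601 timestamp in UTC


def build_label(content_id: str, declared_by_uploader: bool, now: datetime) -> SgiLabel:
    """Create the label record for one piece of synthetic content."""
    return SgiLabel(
        content_id=content_id,
        synthetic=True,
        visible_label="AI-generated",
        declared_by_uploader=declared_by_uploader,
        labeled_at=now.astimezone(timezone.utc).isoformat(),
    )


def to_metadata_json(label: SgiLabel) -> str:
    """Serialize the record so downstream services can parse it."""
    return json.dumps(asdict(label), sort_keys=True)


def is_marked_synthetic(metadata_json: str) -> bool:
    """What a downstream service might run to detect the flag."""
    return json.loads(metadata_json).get("synthetic") is True
```

A platform following this pattern would render `visible_label` in the player or image overlay and attach the JSON string to the file or its API response, so a downstream service can call something like `is_marked_synthetic` without inspecting the media itself.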

Three‑Hour Takedown

When a piece of SGI is flagged as harmful—because it threatens public safety, defames individuals, or targets minors—platforms have a strict three‑hour window to remove it after receiving a notice. Failure to act can trigger suspension of services or legal liability.
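The arithmetic behind the window is simple, but tracking it per notice is where moderation tooling tends to need help. The sketch below computes the deadline and the time remaining from the moment a notice is received. The function names are hypothetical, and it assumes the clock starts at receipt of the notice, as described above, with all timestamps timezone‑aware.

```python
from datetime import datetime, timedelta, timezone

# Three-hour window as described in the article.
TAKEDOWN_WINDOW = timedelta(hours=3)


def takedown_deadline(notice_received_at: datetime) -> datetime:
    """Latest time by which flagged content should be removed."""
    if notice_received_at.tzinfo is None:
        raise ValueError("notice_received_at must be timezone-aware")
    return notice_received_at + TAKEDOWN_WINDOW


def time_remaining(notice_received_at: datetime, now: datetime) -> timedelta:
    """Time left before the deadline, floored at zero once it has passed."""
    return max(takedown_deadline(notice_received_at) - now, timedelta(0))


def is_overdue(notice_received_at: datetime, now: datetime) -> bool:
    """True once the current time is past the deadline."""
    return now > takedown_deadline(notice_received_at)


if __name__ == "__main__":
    notice = datetime(2026, 3, 1, 9, 0, tzinfo=timezone.utc)
    print(takedown_deadline(notice).isoformat())
```

A moderation queue could sort open notices by `time_remaining` so that items closest to the deadline surface first.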

Why India Introduced the Rules

The surge of convincing deepfakes has pressured regulators to act. Earlier IT rules focused on hate speech and child sexual abuse material, but they didn’t address AI‑driven deception. By embedding labeling requirements into the existing framework, India avoids drafting an entirely new law while leveraging familiar enforcement mechanisms.

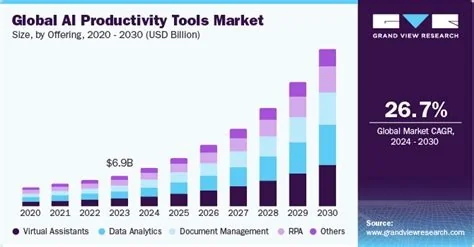

Impact on Tech Companies and Creators

Global giants now face a concrete engineering task: build AI‑detection pipelines, add UI prompts for creators, and ensure metadata is attached at upload. Domestic startups that specialize in AI‑content verification or watermarking may see a surge in demand as platforms scramble to meet compliance.

Content creators will need to adjust their workflows, especially those who rely on generative tools for quick video edits or synthetic voice‑overs. The exemption for routine edits helps reduce false positives, keeping the system focused on truly deceptive material.

Expert Insight

“From a compliance standpoint, the biggest challenge is the three‑hour takedown window,” says a senior legal counsel at a leading Indian social‑media platform. “Our moderation teams already operate 24/7, but automating detection of nuanced deepfakes within that timeframe is a stretch. We’re investing in third‑party AI‑verification services and building internal pipelines to embed the required metadata at the point of upload. The labeling prompt is straightforward, but we have to make sure it doesn’t become a friction point for creators, especially in regional languages.”

The same counsel adds that the exemption for routine edits was a relief. “If every colour correction required a label, we’d be drowning in false positives. The carve‑outs give us breathing room to focus on genuinely deceptive content.”

Future Outlook

India’s new labeling mandate is a decisive step toward taming the synthetic media tide. It forces platforms to be transparent about what’s real and what’s generated by an algorithm, giving you a clearer signal before you hit “share.” While the three‑hour takedown rule will be tested in real‑world conditions, the expectation is that the digital landscape will become a lot more labeled—and a lot less mysterious.