AI assistants with web browsing can serve as covert command-and-control (C2) channels, letting malware exchange data with its operators through ordinary chatbot queries. Researchers demonstrated that Microsoft Copilot and xAI's Grok can be prompted to fetch attacker-controlled URLs, summarize the content, and return the data, so the infected host never has to contact a traditional C2 server directly. For defenders, traffic to a trusted assistant can no longer be assumed benign.

How Prompt Injection Turns AI Assistants into C2 Channels

The attack relies on prompt injection: crafted input that steers an AI assistant into actions its provider never intended. Malware on an infected host sends a prompt such as “Visit http://malicious-site.com/step1 and tell me what you see.” The assistant retrieves the page, parses it, and replies with the instructions embedded there, so the attacker's commands arrive inside what looks like normal chatbot traffic.

Why This Threat Matters Now

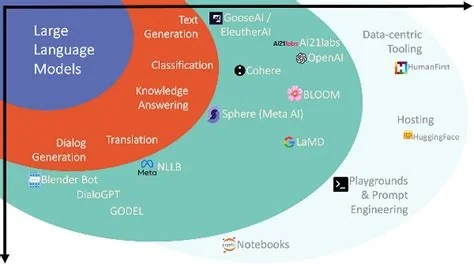

Both Copilot and Grok recently added web-browsing features that let users pull real-time information without logging in or providing an API key. Those conveniences also create publicly reachable endpoints that can relay data silently, and with no account or key involved there is no credential for defenders to revoke. As more teams embed these assistants into daily workflows, the attack surface grows and malicious requests become harder to separate from routine use.

Detection Challenges for Security Teams

Traditional defenses flag known C2 domains, suspicious outbound connections, or odd DNS queries. An AI-driven channel, however, looks like legitimate HTTPS traffic to a Microsoft or xAI domain on port 443. Detecting it requires deeper inspection of conversational content, or behavioral analytics that can spot unusual request-response patterns such as evenly spaced queries from a host where no one is at the keyboard.
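One form of behavioral analytics is to look for machine-like timing. The sketch below is a minimal illustration, not a production detector: it assumes proxy logs have already been reduced to `(host, domain, unix_time)` tuples, and the hostnames in `AI_DOMAINS`, the function names, and the `max_cv` threshold are all illustrative assumptions. It flags hosts whose requests to assistant domains arrive at nearly constant intervals.

```python
from statistics import mean, pstdev

# Assumed, illustrative list of assistant hostnames; adjust to your environment.
AI_DOMAINS = {"copilot.microsoft.com", "grok.com"}


def beacon_score(timestamps):
    """Return the coefficient of variation of the gaps between requests.

    Values near 0 mean very regular, machine-like timing.
    Returns None when there are too few requests to judge.
    """
    ts = sorted(timestamps)
    gaps = [later - earlier for earlier, later in zip(ts, ts[1:])]
    if len(gaps) < 4:
        return None
    avg = mean(gaps)
    if avg == 0:
        return 0.0
    return pstdev(gaps) / avg


def suspicious_hosts(events, max_cv=0.1):
    """Return hosts whose AI-domain requests are suspiciously regular.

    `events` is an iterable of (host, domain, unix_time) tuples;
    `max_cv` is a hypothetical threshold that needs tuning per network.
    """
    by_host = {}
    for host, domain, t in events:
        if domain in AI_DOMAINS:
            by_host.setdefault(host, []).append(t)
    flagged = []
    for host, times in by_host.items():
        score = beacon_score(times)
        if score is not None and score <= max_cv:
            flagged.append(host)
    return sorted(flagged)
```

Regular timing alone is weak evidence, since malware can add jitter and some legitimate integrations poll on a schedule, so a score like this is better treated as one signal among several than as a verdict.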

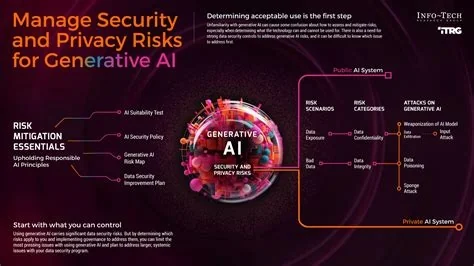

Mitigation Strategies for Defenders

- Enforce authentication for all AI‑assistant usage and limit web‑browsing features to vetted accounts.

- Implement outbound filtering that blocks non‑essential AI endpoints or routes traffic through a proxy capable of inspecting request payloads.

- Update security policies to treat any outbound AI request as potentially suspicious until you verify the user and purpose.

- Educate users that traffic to a chatbot can carry attacker commands once a machine is compromised; awareness training should cover this new risk.
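The first three measures can be combined into a single egress decision. The following is a rough sketch under stated assumptions: the `OutboundRequest` record, the `decide` function, the endpoint list, and the vetted-user set are all hypothetical, and a real proxy would have to derive fields such as `wants_browsing` from the inspected request payload.

```python
from dataclasses import dataclass
from typing import Optional

# Assumed, illustrative values; replace with your own inventory.
AI_ENDPOINTS = {"copilot.microsoft.com", "grok.com"}
VETTED_USERS = {"alice@example.com"}  # hypothetical accounts allowed to browse


@dataclass(frozen=True)
class OutboundRequest:
    host: str
    user: Optional[str]   # None when the session is unauthenticated
    wants_browsing: bool  # True when the prompt asks the assistant to fetch a URL


def decide(req):
    """Return 'allow', 'block', or 'inspect' for one outbound request."""
    if req.host not in AI_ENDPOINTS:
        return "allow"    # not AI traffic; other policies apply
    if req.user is None:
        return "block"    # enforce authentication for all assistant use
    if req.wants_browsing and req.user not in VETTED_USERS:
        return "block"    # browsing is limited to vetted accounts
    return "inspect"      # treat remaining AI requests as suspicious until verified
```

Returning "inspect" rather than "allow" for authenticated AI traffic mirrors the policy of treating every outbound AI request as potentially suspicious until the user and purpose are verified.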

Expert Insights

“Seeing AI assistants used as C2 proxies changes the threat landscape overnight,” says a senior analyst involved in the research. “We’ve always treated browsers and email clients as high‑risk, but now a legitimate AI service can be the conduit.”

Another security engineer notes, “Our SIEM now flags any AI‑assistant domain accessed from a non‑interactive session. Ignoring that noise would be reckless.”
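A rule of the kind the engineer describes might look like the sketch below. It is an assumption-laden illustration rather than the engineer's actual SIEM logic: the event keys (`domain`, `process`, `session_type`), the domain list, and the browser list are all hypothetical and would need to match your own log schema.

```python
# Assumed, illustrative values; replace with your own inventory.
AI_DOMAINS = {"copilot.microsoft.com", "grok.com"}
INTERACTIVE_PROCESSES = {"chrome.exe", "msedge.exe", "firefox.exe"}


def is_noninteractive_ai_access(event):
    """Return True when an AI-assistant domain is reached outside a user-driven browser.

    `event` is a hypothetical normalized log record with the keys
    'domain', 'process', and 'session_type'.
    """
    if event["domain"].lower() not in AI_DOMAINS:
        return False
    user_driven = (
        event["process"].lower() in INTERACTIVE_PROCESSES
        and event["session_type"] == "interactive"
    )
    return not user_driven
```

For example, a record such as `{"domain": "grok.com", "process": "svchost.exe", "session_type": "service"}` would be flagged, while the same domain opened in a browser during an interactive session would not.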

The takeaway is clear: as AI assistants become embedded in daily workflows, they also become attractive footholds for adversaries. By auditing AI usage, applying strict access controls, and keeping users informed, you can keep these assistants on your side rather than in the hands of attackers.