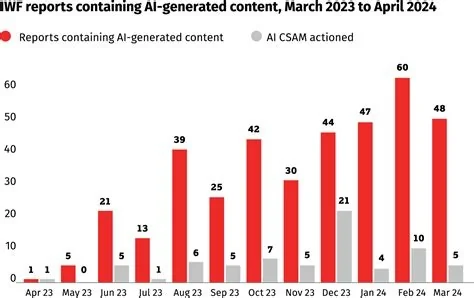

AI‑generated child sexual abuse material has exploded, with reports showing a rise of more than 26,000 percent in a single year. The surge threatens child safety worldwide, prompting urgent calls from the United Nations, national regulators, and advocacy groups for stronger safeguards, stricter enforcement, and comprehensive AI‑impact assessments.

Scale of the AI‑Generated CSAM Surge

Statistical Explosion

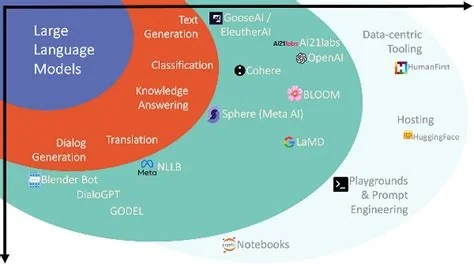

The latest data reveal that AI‑created videos depicting child sexual abuse increased dramatically, with over half classified in the most severe “Category A” tier. This unprecedented growth highlights the ease with which large‑scale generative models can produce realistic illegal content with minimal human input.

Regulatory and Legal Responses

U.S. State Attorney General Investigations

State attorneys general across the United States have intensified enforcement actions targeting AI‑generated sexual content. Several states have launched formal inquiries into platforms responsible for mass‑producing illegal imagery, emphasizing the need for accountability and rapid legal scrutiny.

International Policy Momentum

Beyond the United States, multiple countries are preparing new regulations to protect minors online. Nations such as Malaysia, the United Kingdom, France, and Canada are evaluating frameworks that could mirror existing bans on social‑media accounts for children under sixteen, with the aim of curbing minors' exposure to harmful AI‑generated material.

Advocacy and Ethical Pressure

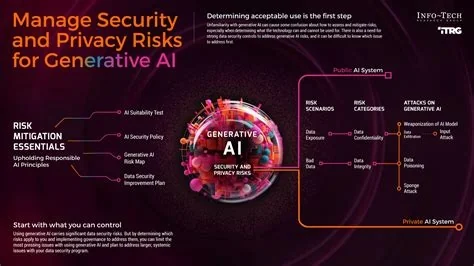

Advocacy organizations are amplifying calls for corporate responsibility, arguing that the rapid proliferation of AI‑generated abuse reflects systemic ethical failures. They demand stronger content‑moderation standards, mandatory AI‑impact assessments, and increased AI literacy for parents, educators, and policymakers.

Technical Challenges for Content Moderation

Current moderation tools struggle to keep pace with the volume and sophistication of AI‑generated media. Hyper‑realistic depictions blur the line between synthetic imagery and recordings of actual abuse, complicating detection, classification, and law‑enforcement investigations.

Future Outlook and Required Actions

Without coordinated, decisive action, AI‑enabled child sexual abuse content is likely to continue expanding. The United Nations urges immediate implementation of protective measures, while national authorities pursue legal scrutiny of offending platforms. Effective regulation will require technical training for policymakers, robust AI‑impact assessments, and enforceable standards that safeguard children and restore public trust in emerging technologies.