Parliamentary committees in the UK, New Zealand and other jurisdictions are raising alarms over the unclear copyright status of AI‑generated content. Lawmakers argue that without clear authorship rules, advertisers, platforms and creators risk infringement liability. The growing wave of AI‑driven ads, news and social posts highlights the urgent need for legislative clarity.

Why Parliaments Are Scrutinizing AI‑Generated Works

Legislators have observed a surge in AI‑produced advertising, news articles and social‑media posts. They warn that, absent definitive authorship standards, businesses could face lawsuits over outputs that reproduce copyrighted material from a model's training data. Clear rules are needed to determine who owns AI‑generated outputs and how the underlying works are protected.

Legal Backdrop: Human Input Requirement

U.S. copyright guidance emphasizes that AI‑generated outputs qualify for protection only when a human provides sufficient creative input. Under this standard, which recent court decisions have applied, fully autonomous creations (such as a text‑only advertisement drafted by an AI without any human editing) are ineligible for copyright protection under current U.S. law.

Litigation Testing the Limits

More than fifty lawsuits across the United States and the European Union are challenging the legality of using copyrighted works as training data. Key disputes focus on whether such use constitutes infringement and who, if anyone, can claim ownership of the resulting AI‑generated content.

- Image‑rights holders allege that AI developers trained models on millions of protected images without permission.

- Authors claim that language‑model outputs reproduce protected text without proper licensing.

- Courts are evaluating the balance between data‑driven innovation and existing copyright protections.

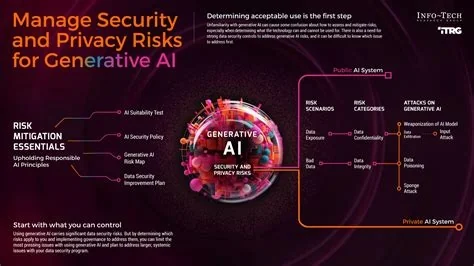

Transparency and Data Provenance as Mitigation

Experts recommend that AI developers maintain detailed provenance logs, embed metadata in generated outputs, and adopt watermarking or blockchain‑based tracking. These practices help platforms and rights holders quickly identify infringing material and support efficient takedown or licensing negotiations.
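As a rough illustration of what a provenance log entry might look like, the Python sketch below hashes a generated output and stores the digest alongside the model name and the identifiers of licensed sources. The field names, the `make_provenance_record` and `matches_record` helpers, and the sample values are all hypothetical and not drawn from any standard or from the experts cited here. A plain hash only detects byte-identical copies, so this is not a substitute for watermarking.

```python
import hashlib
import json
from datetime import datetime, timezone


def make_provenance_record(output_text, model_name, source_ids, created_at=None):
    """Build one provenance record for a generated output (hypothetical schema)."""
    if created_at is None:
        created_at = datetime.now(timezone.utc).isoformat()
    return {
        # SHA-256 digest identifies the exact output without storing its text.
        "content_sha256": hashlib.sha256(output_text.encode("utf-8")).hexdigest(),
        "model": model_name,
        # Sorted so that the same set of sources always serializes identically.
        "source_ids": sorted(source_ids),
        "created_at": created_at,
    }


def matches_record(candidate_text, record):
    """Return True only if the candidate is byte-identical to the logged output."""
    digest = hashlib.sha256(candidate_text.encode("utf-8")).hexdigest()
    return digest == record["content_sha256"]


# Example: log one advertisement and later check a disputed copy against it.
record = make_provenance_record(
    "Buy now",                      # generated output (sample value)
    "demo-model",                   # assumed model name
    ["lic-2", "lic-1"],             # assumed licence identifiers
    created_at="2024-01-01T00:00:00+00:00",
)
log_line = json.dumps(record, sort_keys=True)  # one JSON line per output
```

A platform handling a takedown request could call `matches_record` on the disputed text to confirm whether it came from a logged generation. Edited or paraphrased copies would not match, which is where embedded metadata or watermarking would have to take over.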

Parliamentary Responses Worldwide

In the United Kingdom, a House of Commons committee is commissioning expert testimony, reviewing copyright statutes, and considering amendments to clarify authorship and liability for AI‑generated works. New Zealand’s parliament has announced an inquiry into AI and intellectual property, mirroring the UK approach and signaling growing global attention.

Implications for the Tech Industry

Advertisers, media companies and AI platform providers face heightened compliance risk. Companies may need to audit training data pipelines, secure licenses for copyrighted material, and implement robust attribution mechanisms to avoid injunctions, takedown notices or costly settlements. Conversely, clearer rules could unlock new licensing opportunities, creating revenue streams for rights holders while giving AI firms a defensible data foundation.

Conclusion

Parliamentary bodies across the globe are converging on the same conclusion: existing copyright frameworks are ill‑suited to the realities of generative AI. By drawing on legal analyses, ongoing litigation and emerging best‑practice recommendations, legislators aim to craft policies that protect creators without stifling technological progress. The coming years will likely see a patchwork of national reforms, but the momentum toward harmonized, transparent rules for AI‑generated content is unmistakable.