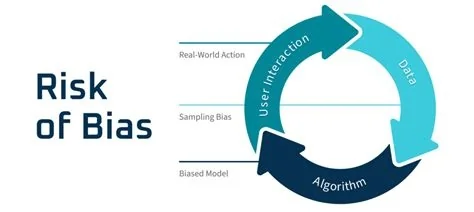

Generative AI systems now face a systemic bias risk that threatens trust across digital services. The bias stems from both data and human interaction, creating feedback loops that amplify prejudice. Organizations must treat AI data pipelines with the same rigor as software pipelines, implementing zero‑trust controls, provenance tracking, and regular bias audits to safeguard fairness and security.

Understanding Systemic Bias in Generative AI

Human Influence on Model Outputs

Users’ cognitive shortcuts, emotional influences, and social pressures shape how they write prompts, interpret results, and give feedback to models. This two‑way loop can reinforce existing prejudices even when training data appear well‑curated, turning subtle bias into a pervasive issue.

Risks of Unverified AI‑Generated Content

Unverified AI outputs can become a primary source of accidental data exposure, and they carry bias as well. When organizations accept “good‑enough” content without verification, subtle biases accumulate across applications such as hiring tools, recommendation engines, and automated customer service, degrading decision quality at scale.

Zero‑Trust Data Governance for AI

Adopting a Zero‑Trust Posture

Zero‑trust treats all AI‑generated data as unverified until proven trustworthy. This strategy enforces strict authentication, provenance tracking, and continuous monitoring of data flows from ingestion to model deployment, reducing the risk of biased or malicious content entering production.
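As one illustration of treating an artifact as unverified until proven trustworthy, the sketch below attaches a provenance record to a piece of content and checks it before the content is admitted to a workflow. It is a minimal sketch rather than a prescribed implementation: the `Provenance` record, the `sign` and `is_trusted` helpers, the `source` label, and the shared-secret HMAC scheme are all assumptions made for this example.

```python
import hashlib
import hmac
from dataclasses import dataclass


@dataclass(frozen=True)
class Provenance:
    """Hypothetical provenance record attached to an AI-generated artifact."""
    source: str     # assumed label for the producing model or dataset
    sha256: str     # hex digest of the artifact content
    signature: str  # hex HMAC-SHA256 over "source:sha256"


def sign(content: bytes, source: str, key: bytes) -> Provenance:
    """Create a provenance record at the point where the artifact is produced."""
    digest = hashlib.sha256(content).hexdigest()
    message = f"{source}:{digest}".encode()
    signature = hmac.new(key, message, hashlib.sha256).hexdigest()
    return Provenance(source, digest, signature)


def is_trusted(content: bytes, prov: Provenance, key: bytes) -> bool:
    """Admit the artifact only if its content and provenance both verify."""
    digest = hashlib.sha256(content).hexdigest()
    if not hmac.compare_digest(digest, prov.sha256):
        return False  # content changed after the record was created
    message = f"{prov.source}:{prov.sha256}".encode()
    expected = hmac.new(key, message, hashlib.sha256).hexdigest()
    return hmac.compare_digest(expected, prov.signature)
```

A downstream step would call `is_trusted` before using the content and reject anything that fails. In practice the key would come from a secrets manager, and an asymmetric signature may be a better fit when producers and consumers should not share a secret.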

Actionable Steps to Mitigate Bias

- Map the AI data‑supply chain – Identify every dataset, annotation process, and third‑party component that contributes to a generative system.

- Implement zero‑trust controls – Apply verification, access‑control, and audit mechanisms to AI‑generated artifacts before they enter workflows.

- Audit for cognitive bias – Conduct regular reviews of prompting practices and output evaluations to surface systematic distortions introduced by human operators.
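The audit step above could start from something as simple as comparing how often human reviewers accept outputs for different groups. The sketch below is one possible starting point, not an established audit method: the `(group, accepted)` review format and both function names are hypothetical, and any threshold for flagging a disparity is left to the organization.

```python
from collections import defaultdict


def acceptance_rates(reviews):
    """Map each group to the share of its outputs that reviewers accepted.

    `reviews` is an iterable of (group, accepted) pairs, a format assumed
    for this example.
    """
    totals = defaultdict(int)
    accepted = defaultdict(int)
    for group, ok in reviews:
        totals[group] += 1
        accepted[group] += int(ok)
    return {group: accepted[group] / totals[group] for group in totals}


def disparity_ratio(rates):
    """Lowest acceptance rate divided by the highest; 1.0 means parity."""
    highest = max(rates.values())
    return min(rates.values()) / highest if highest > 0 else 1.0
```

A ratio well below 1.0 does not prove bias on its own, but it marks where a closer review of prompting practices and evaluation criteria is worth the time.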

Future Outlook

By embracing transparent data provenance and rigorous audit practices, organizations can transform AI from an unchecked amplifier of prejudice into a calibrated partner that upholds fairness and security. The shift toward zero‑trust, audit‑driven AI governance will be pivotal in restoring public confidence in generative technologies.