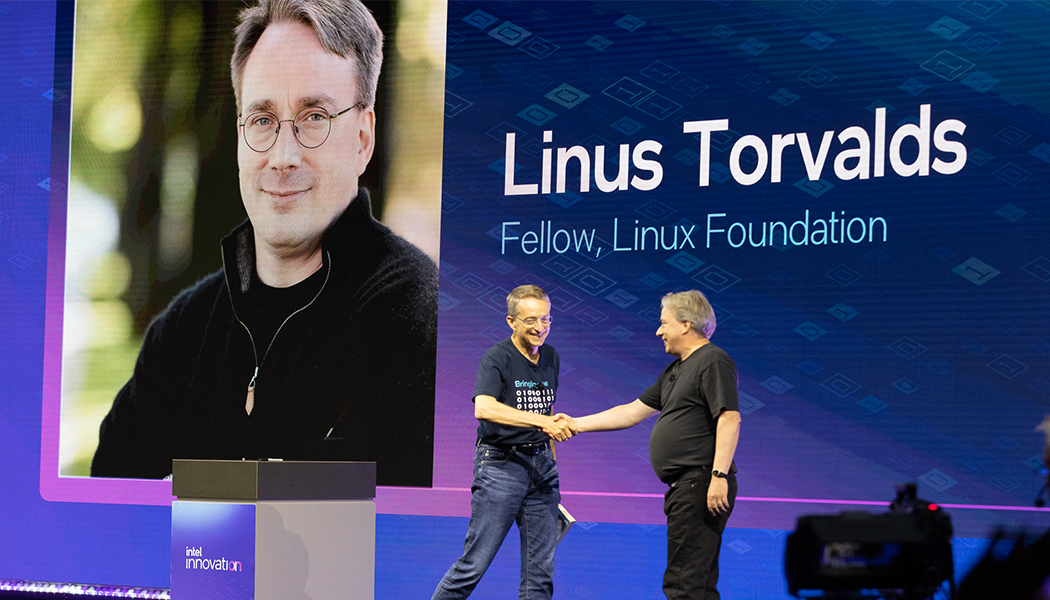

Over the winter holidays, Linus Torvalds, the creator of Linux and Git, quietly launched a new open‑source experiment called AudioNoise. What sets the project apart is not its functional ambition (it is an exploratory audio‑processing library) but the way it was kick‑started: Torvalds relied on AI‑assisted “vibe coding” to draft large portions of the initial codebase. The approach has ignited a fresh round of debate across the open‑source community about the role of large language models (LLMs) in production‑grade software, especially in projects as critical as the Linux kernel.

What happened

According to a Phoronix report published yesterday, Torvalds spent his holiday downtime experimenting with an LLM‑driven workflow known as *vibe coding*. The term, popularised by a handful of high‑profile developers, describes a loosely structured style of programming in which the AI generates code that the programmer then refines, tests, and integrates. In the AudioNoise README, Torvalds writes, “I let the model spin up a skeleton, then I iterate on it, much like a musical jam session.” The repository, published on GitHub under an open‑source licence, already contains more than 2,000 lines of code, with commit messages explicitly crediting the AI for the initial drafts.

Background and context

Torvalds is no stranger to experimenting with new tools. Over the past few years, he has publicly commented on the growing presence of AI assistants in kernel development, describing them as “just another tool” that can accelerate repetitive tasks. His stance mirrors a broader trend: developers at companies such as Microsoft, Google, and GitHub have begun integrating LLM‑based assistants such as GitHub Copilot, Claude, and Gemini into their daily workflows.

The phenomenon is not limited to hobby projects. Last year, Wikimedia developer Dmitry Brant documented using Claude Code to modernise a 25‑year‑old kernel driver, and The Register highlighted several instances where contributors employed AI to rewrite or audit kernel subsystems. These examples illustrate a gradual shift from curiosity to practical adoption, even in the most safety‑critical codebases.

The controversy

The enthusiastic reception of Torvalds’s experiment was quickly met with sharp criticism. In a post titled “‘Vice Coding’ is Not ‘AI’, It’s a Sewer, It Is Junk,” Techrights blogger Roy Schestowitz dismissed vibe coding on the grounds that “code is a liability,” echoing a sentiment voiced by author Cory Doctorow. Critics argue that AI‑generated code can introduce subtle bugs, security vulnerabilities, and licensing ambiguities that are difficult to detect without rigorous human review.

Schestowitz also tied the debate to broader corporate dynamics, arguing that Microsoft has been rebranding products such as Office and GitHub under the “AI” banner to inflate their perceived value amid financial scrutiny. The implication, according to detractors, is that the hype around AI may be masking deeper problems with quality and accountability.

Torvalds, however, remains pragmatic. In a recent interview cited by Linux‑related outlets, he said, “If the tool can write a loop that compiles and passes tests, I’m happy to keep it. The real work is in understanding why it works and making sure it doesn’t break anything later.” He added that the AI’s output is always treated as a draft, subject to the same peer‑review standards that govern all kernel contributions.

Implications for the open‑source ecosystem

Torvalds’s public endorsement of vibe coding carries weight. As the figurehead of one of the world’s most successful open‑source projects, his willingness to experiment may encourage other maintainers to try LLMs, potentially accelerating development cycles. At the same time, the backlash underscores the need for robust governance frameworks.

In practical terms, the debate is prompting several projects to draft policies around AI‑generated contributions. The Linux kernel community, for instance, has begun discussing mandatory provenance tags for any patch that originates from an LLM, as well as automated static‑analysis pipelines tailored to detect AI‑specific anti‑patterns.

From a legal perspective, the licensing status of AI‑generated code remains a grey area. While most LLM providers claim that their outputs are not subject to copyright, integrating such code into GPL‑licensed projects could raise questions about derivative works and attribution.

Looking ahead

AudioNoise itself is unlikely to become a cornerstone of the audio‑processing landscape, but its real significance lies in the narrative it creates. By openly documenting his vibe‑coding workflow, Torvalds offers a rare glimpse into how a seasoned engineer balances human intuition with machine assistance.

Whether the practice will become a mainstream development paradigm depends on how the community reconciles speed with safety, and how quickly tooling evolves to provide better verification of AI‑produced code. As AI models grow more capable, the line between “assistant” and “author” may blur, forcing the open‑source world to redefine notions of authorship, liability, and quality control.

For now, the conversation sparked by Torvalds’s holiday project is a microcosm of an industry at a crossroads: embrace the efficiency of AI‑driven coding, or double down on traditional, human‑only craftsmanship. The outcome will shape not just the future of Linux, but the broader trajectory of software development in the AI era.
